AI rears its ugly head again: an Australian study shows that people are easily “fooled” by AI-generated Caucasian faces, judging them more real than the faces of actual humans.

While the technology has made it easier for anyone to become an artist, it also has pitfalls that have divided opinion.

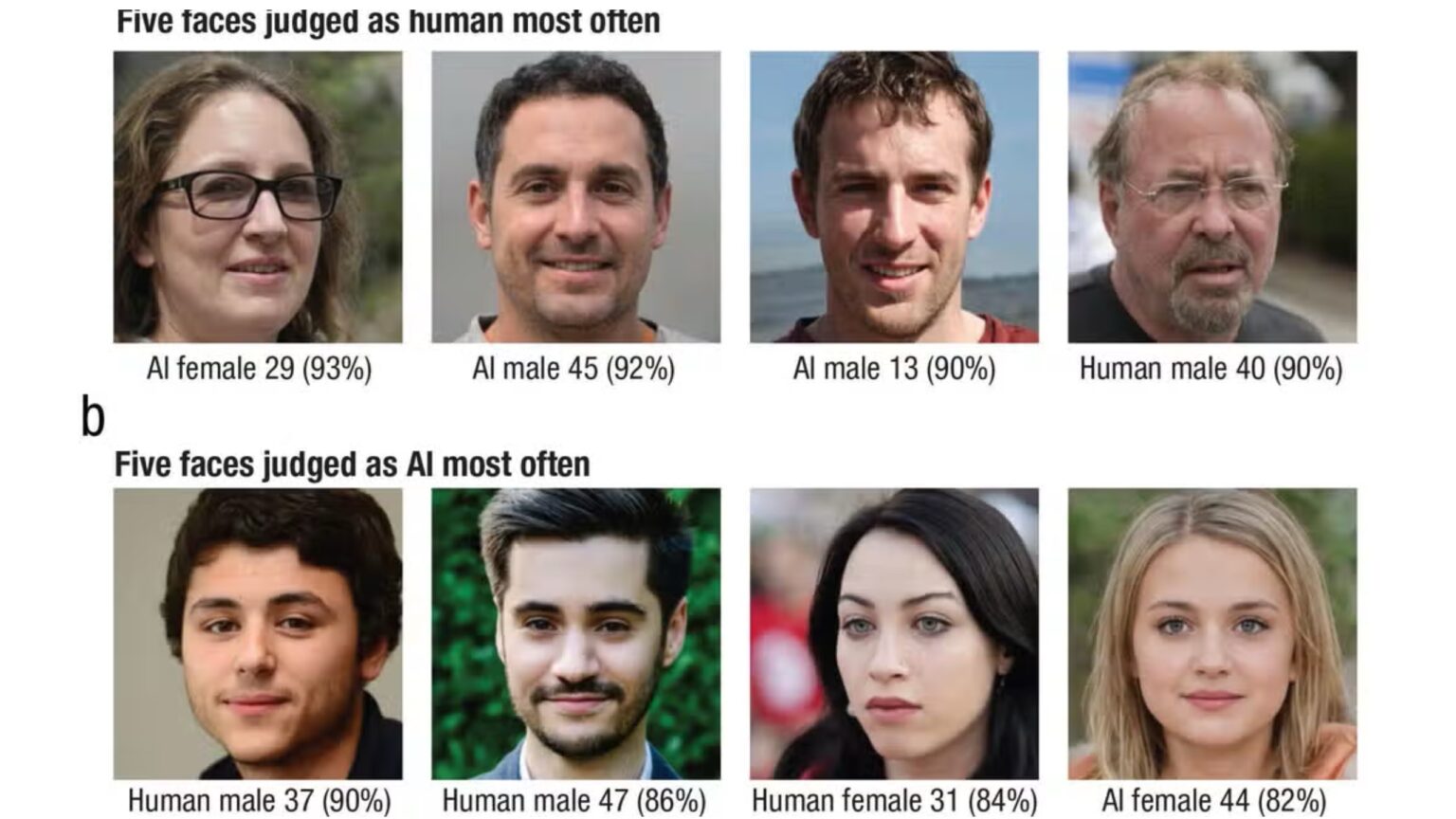

According to a study led by researchers at the Australian National University (ANU) and published in Psychological Science, people judged AI-generated white faces to be human, and more real than the faces of actual humans.

The racial bias

However, this was not the case for faces of people of color, which raises racial concerns about AI technology. The technology has been labeled racially biased, and it has been criticized for carrying other forms of bias against certain groups of people.

“If white faces are consistently perceived as more realistic, this technology could have profound implications for people of color by ultimately reinforcing racial biases,” said Dr. Amy Dawel, a senior author of the paper.

Dr. Dawel explained that the glaring difference between AI-generated faces of white people and those of black people was the result of algorithms being trained disproportionately on white faces.

“This problem is already apparent in current AI technologies used to create professional-looking headshots.

“When used for people of color, the AI is altering their skin and eye color to those of white people,” said the researchers.

In a separate but related case, Arsenii Alenichev, a postdoctoral fellow with the Oxford-Johns Hopkins Global Infectious Disease Ethics Collaborative, was experimenting with an AI image generator. When he asked for a picture of a black doctor assisting poor white children, the generated images always depicted black children and white doctors, despite his specifications.

Some images of black scientists placed wildlife such as giraffes next to them, while other attempts produced disturbing, disfigured images of “smiling” black women.

A Bloomberg report suggests that AI models can also perpetuate stereotypes and may expose marginalized people to harmful situations.


The trickery

Elizabeth Miller, an ANU PhD candidate and co-author of the study, said the team found that most participants were confident in their answers even as they mistook AI-made faces for real ones.

“This means that people who are mistaking AI imposters for real people don’t know they are being tricked,” she said.

According to The Standard, although there were still some physical differences between AI and human faces, people were still fooled. Dr. Dawel indicated that more in-proportion faces were “typical signs that AI had generated the face.”

But the survey participants mistook this proportionality as a sign of humanness.

“We can’t rely on these physical cues for long. AI technology is advancing so quickly that the difference between AI and human faces will probably disappear soon,” she said.

She added that this could be problematic and carry huge implications with regard to online misinformation, deepfakes, and identity theft.

Misinformation and fraudsters

Dr. Dawel said that because AI faces can no longer be reliably distinguished from real ones, people need to be cautious or risk being duped by fraudsters.

“Given that humans can no longer detect AI faces, society needs tools that can accurately identify AI imposters,” she said.

“Educating people about the perceived realism of AI faces could help make the public appropriately skeptical about the images they’re seeing online.”

Some experts in generative AI predict that as much as 90% of online content could be AI-generated in the next few years.

Already, with the rise of generative AI and its ability to create text, images, audio, and video, there has been an increase in cases of people being duped into revealing their credit card numbers.

Fraudsters also trick unsuspecting people into sending money by posing as family members or close friends who are in trouble and urgently need help.
