If you have been on social media this week, you have probably come across AI-generated images of your friends as fairies, animé characters, and other magical beings.

This is partly due to Lensa, an AI app that generates digital portraits from photos users upload. The app’s portraits practically took over the internet, and Lensa became the most downloaded app in Apple’s App Store.

Like other AI applications that digitally render images, Lensa has drawn both admiration and controversy, the latter partly for what critics see as a blatant sexualization of images of women. Other users noted that the app made their skin paler or their bodies thinner.

How to get your own ‘magic avatar’

The images doing the rounds are created by Lensa’s Magic Avatars feature. To try it, you have to download the Lensa app on a phone. An annual subscription costs around $35.99, but the app’s services are also available for free, with limitations, through a week-long trial for anyone who wants to check it out first.

However, generating the popular magic avatars requires an additional fee because, according to the app, doing so takes “tremendous computational power.”

On a free trial, one can get 50 avatars for $3.99 and 200 avatars for $7.99. For the best results, the app encourages users to upload at least 20 close-up images.

Ideally, these should be close-ups of one’s face with a variety of backgrounds, facial expressions, and angles. The app requires users to be at least 13 years old.

Lensa is not an entirely new application. It is made by Prisma Labs, whose Prisma app first became popular in 2016 thanks to a feature that turned users’ selfies into images in the style of famous artists.

How does Lensa work?

According to the company, it uses what it calls “TrueDepth API technology”: a user provides photos, or “face data,” and the AI is trained on them so that its algorithms perform better and return better results. Training is the process in which the AI learns from data and its models are then validated and tested.

To use the feature, one can select 20 selfies with a variety of expressions and angles and choose the 100-avatar option.

It takes about 20 minutes to do the job. Once done, the AI returns avatars that fall into 10 categories such as fantasy, fairy princess, focus, pop, stylish, animé, light, kawaii, iridescent, and cosmic.

“In general, I felt like the app did a decent job producing artistic images based on my selfies. I couldn’t quite recognize myself in most of the portraits, but I could see where they were coming from,” Zoe Sottile of CNN wrote.

“It seemed to recognize and repeat certain features, like my pale skin or my round nose, more than others. Some of them were in a more realistic style, and were close enough I might think they were actually photos of me if I saw them from afar. Others were significantly more stylized and artistic, so they felt less specific to me.”

Sottile also noticed that the AI made her look lighter.

In my own test, the app also seemed to automatically make me lighter. A photo from my gallery of me and a friend with slightly darker skin returned a much lighter version of both of us, a clear exaggeration that exposed an inclination to lighten black skin tones.

Sexualizing women

Others who used it had more or less similar concerns.

Women say the AI is quick to sexualize their images. As we explained in an earlier article, this stems from the huge number of sexualized images in the data sets used to train AI.

In other words, the AI is all too familiar with generating such images and can easily veer into pornography. With a bit of trickery, a user can also prompt it to produce pornographic images from uploaded photos.

sexualising a young woman who spent most of her time in the public eye wearing baggy clothes just to avoid this type of sexualisation. ban men from using AI https://t.co/YnNCurvYDS

— lynchian joan of arc (@postnuclearjoan) December 13, 2022

In other stories, we have covered how AIs can be tricked into, for instance, providing information on how to make a bomb. Notably, the sexualization issue did not appear in images of men uploaded to the Magic Avatars feature. Writing for MIT Technology Review, Melissa Heikkilä said:

“My avatars were cartoonishly pornified, while my male colleagues got to be astronauts, explorers, and inventors.”

Sottile, for her part, said that in “one of the most disorienting images” the AI made her look “like a version of my face was on a naked body.”

“In several photos, it looked like I was naked but with a blanket strategically placed, or the image just cut off to hide anything explicit,” she said.

“And many of the images, even where I was fully clothed, featured a sultry facial expression, significant cleavage, and skimpy clothing which did not match the photos I had submitted,” Sottile added.

Others feared that AI technology like Lensa could turn them into unwitting porn stars.

ALERT: Anybody in the world can have your NON-CONSENSUAL PORN now !!

People are in awe of generative AI tools like Lensa without understanding the dire consequences.

🧵 WORST PART? here's what companies don't want you to know: pic.twitter.com/gdS0h1FbXj

— Kritarth Mittal | Soshals (@kritarthmittal) December 14, 2022

Body shaming

For full-figured women, the experience was different and, in some cases, worse: the AI made them thinner and sexier.

“Lmfao if you got Body Dysmorphia don’t use that Lensa app for the AI generated pics. This is your warning,” a user wrote.

Another said the app had made her look more Asian.

Tried the AI Lensa app. Tell me why AI made me look more Asian than ever? 😂😂😂😂 pic.twitter.com/lveJNXaXHO

— Kristina🌴🌊🐚 (@kut1e) December 9, 2022

Another user took to Twitter to complain that he had paid $8 only to experience body dysmorphia.

paid $8 to Lensa just to experience body dysmorphia pic.twitter.com/NRYfh5Jyej

— garrett (@dollhaus_x) December 3, 2022

Body dysmorphia is a mental health condition where a person spends a lot of time worrying about flaws in their appearance. These flaws are often unnoticeable to others.

Another user complained that the AI automatically took significant weight off her full-figured images.

“One complaint I have about Lensa AI is it will make you skinny in some images. As a fat person those images really bothered me. So careful not to get triggered if you’re a fellow fatty with no interest in becoming skinny,” Mariah Successful (@Shlatz) wrote on December 5, 2022.

Psychological time bomb

Psychologists echo her concern that the app could be triggering for full-figured women.

Dr. Toni Pikoos, an Australia-based clinical psychologist who researches and specializes in treating body dysmorphic disorder, believes the app could do more harm than good and sees it as little more than a “photo-filtering tool” for altering one’s self-perception.

“When there’s a bigger discrepancy between ideal and perceived appearance, it can fuel body dissatisfaction, distress, and a desire to fix or change one’s appearance with potentially unhealthy or unsafe means” like disordered eating or unnecessary cosmetic procedures, Pikoos says.

She expressed concern that the images erased “intricate details” such as “freckles and lines,” which could heighten worries about one’s skin and psychologically trigger a vulnerable person.

“To see an external image reflect their insecurity back at them only reinforces the idea ‘See, this is wrong with me! And I’m not the only one that can see it!’” says Pikoos.

She adds that “seeing this reflected in the app would be very confronting and provide a kind of ‘confirmation’ for the way that they see themselves,” leading them to become “more entrenched in the disorder.”

Because the AI introduces its own features that don’t reflect a user’s real-life appearance, the app could also create new anxieties, she says.

She says the AI’s “magic avatars” were “particularly interesting because it seems more objective, as if some external, all-knowing being has generated this image of what you look like.”

This, she feels, could actually be “useful” for people with body dysmorphic disorder, helping shed light on a “mismatch” between an “individual’s negative view of themselves and how others see them.”

She noted, however, that the AI isn’t objective, because it tries to depict a flawless, more “enhanced and perfected version” of one’s face.

For instance, someone with body dysmorphic disorder, or BDD, “may experience a brief confidence boost when they view their image, and want to share this version of themselves with the world,” she says, but they will be hit hard by reality when they see themselves “off screen, unfiltered, in the mirror or a photo that they take of themselves.”

Defending its own

Andrey Usoltsev, CEO of Prisma Labs, says his company is currently “overwhelmed” with inquiries about Lensa. He offered a link to an FAQ page that addresses questions about sexualized imagery, though not the kind of user reactions Pikoos describes.

Stable Diffusion

Lensa also uses Stable Diffusion, an open-source deep-learning model that can create new images from text descriptions and can run on a Windows or Linux PC, on a Mac, or in the cloud on rented hardware.

Through extensive training, Stable Diffusion’s neural network has learned to associate words with the general statistical relationships between the positions of pixels in images.

In another story, we covered how the technology could have life-wrecking consequences by depicting people as criminals or as involved in unflattering activities such as theft.

For instance, one can give the open-source Stable Diffusion a prompt such as “Tom Hanks in a classroom,” and it will return a new image of Tom Hanks in a classroom. In Tom Hanks’s case, this is easy because hundreds of his photos are already in the data set used to train Stable Diffusion.

Artists getting a raw deal too

On the art front, some artists are unhappy.

They worry the AI could threaten their livelihoods, since no artist, digital or otherwise, can produce a portrait as fast as AI can.

I’m cropping these for privacy reasons/because I’m not trying to call out any one individual. These are all Lensa portraits where the mangled remains of an artist’s signature is still visible. That’s the remains of the signature of one of the multiple artists it stole from.

A 🧵 https://t.co/0lS4WHmQfW pic.twitter.com/7GfDXZ22s1

— Lauryn Ipsum (@LaurynIpsum) December 6, 2022

Lensa’s parent company, Prisma Labs, has tried to allay concerns that its technology will eliminate work for digital artists.

“Whilst both humans and AI learn about artistic styles in semi-similar ways, there are some fundamental differences: AI is capable of rapidly analyzing and learning from large sets of data, but it does not have the same level of attention and appreciation for art as a human being,” wrote the company on Twitter on December 6.

It says “the outputs can’t be described as exact replicas of any particular artwork.”

Is this AI generated art or AI copied art? Is Lensa AI Stealing From Human Art? An Expert Explains The Controversy https://t.co/cxi2t6URC7 pic.twitter.com/20xJgbHZgA

— John Chapman (@JChapman1729) December 15, 2022

Altering self image

Kerry Bowman, a bioethicist at the University of Toronto, says the AI has the potential to negatively affect one’s self-image, among other ethical issues.

“In some ways, it can be a lot of fun but these idealized images are being driven by social expectations which can be very cruel and very narrow,” Bowman said on Monday.

Bowman said these AI programs draw on data sources such as the internet to learn the different art styles used in these portraits. The downside is that artists are rarely paid or credited for the use of their work.

“What happens with emerging AI is that the laws have not been able to really keep up with this in terms of copyright law. It’s very difficult and very murky and ethics is even further behind the laws because I would argue that this is fundamentally unfair,” Bowman said.

Personal data concerns

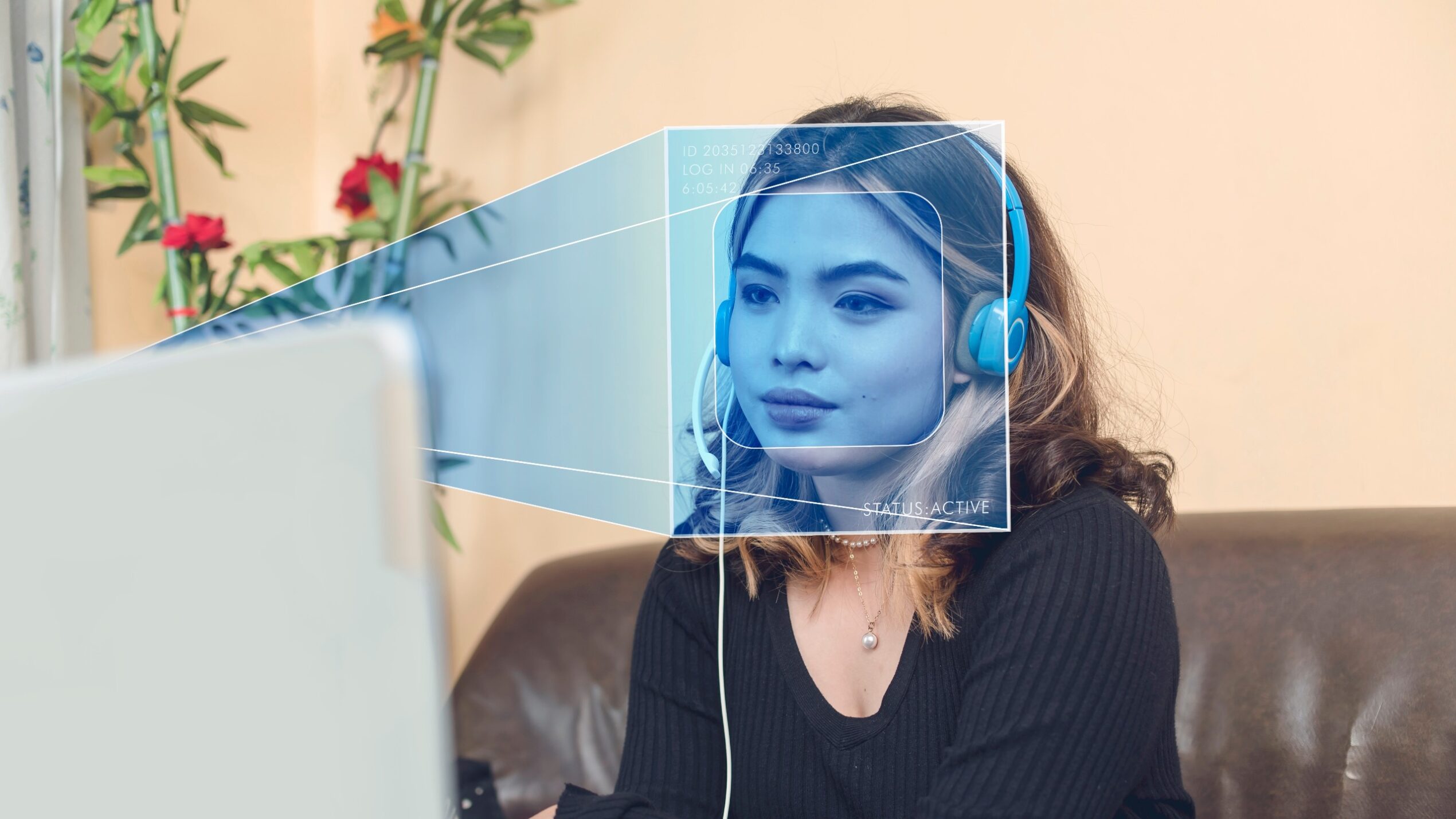

Bowman also raised concerns about how people’s personal data is stored.

“Do you really want your face in a large database? People need to decide for themselves about this but it’s not benign, there’s not nothing to this, it’s not just fun,” he said.

Lensa says photos are kept on its servers and in the app for no more than 24 hours. However, through deep learning and machine learning, the AI learns from this data and delivers better results in the future, even after the photos themselves are deleted. Bowman says this raises safety concerns about facial recognition, since this type of data could be used illegally by police.
