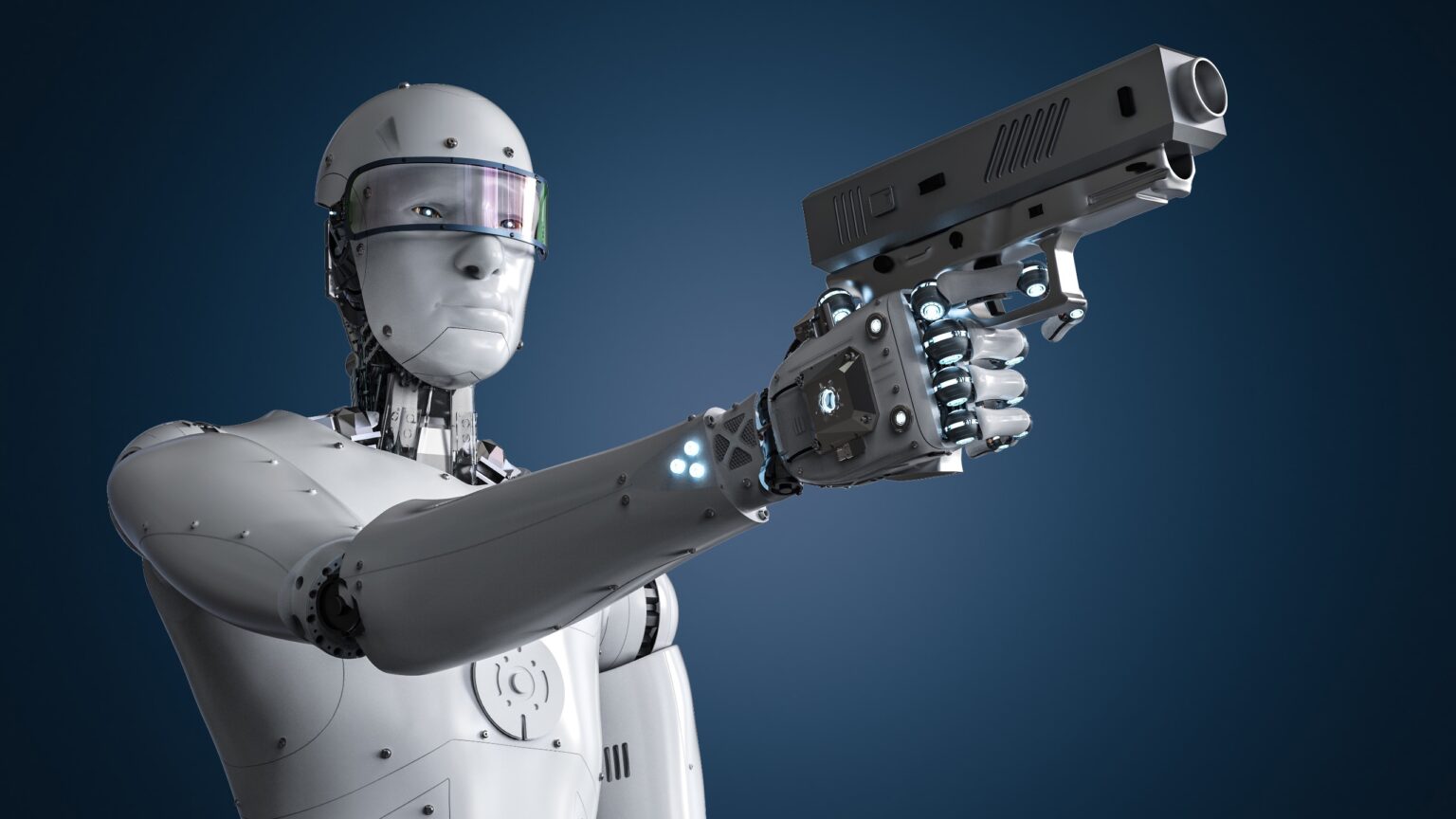

The alarming rate of gun violence in the United States in 2023 has led to increased calls for stronger gun safety measures. But with such measures unlikely anytime soon, individuals and organizations have been turning to alternatives, including artificial intelligence (AI).

In recent years, artificial intelligence has emerged as one possible solution. The growing interest in the technology stems mainly from its promise to detect shooters and prevent violence.

Dave Fraser, CEO of the security company Omnilert, said the technology represents a breakthrough in how AI is used to protect people.

How AI helps

Most of the AI-based technologies currently offered by security companies rely on detection: high-tech cameras that identify suspects, predictive algorithms that flag potential shooters, and metal detectors capable of spotting hidden guns.

According to companies in the sector, security cameras equipped with AI can make up for the errors of security officers. Watching multiple video screens while trying to identify threats leaves room for error.

By contrast, these companies say, AI offers better accuracy and can identify potential mass shooters minutes or even seconds before they strike.

Given how ubiquitous security cameras are today, improving them with AI to become more effective in preventing mass shootings seems like a no-brainer.

The challenges

But some experts are concerned about the impact on privacy, especially since the effectiveness of these products remains questionable. Most AI security companies don't provide independently verified data on the accuracy of their products.

Speaking to ABC News, Jay Stanley, a senior policy analyst at the ACLU Speech, Privacy, and Technology Project, said:

“If you’re going to trade your privacy and freedom for security, the first question you need to ask is: Are you getting a good deal?”

Jake Wiener, a lawyer for the Electronic Privacy Information Center in Washington, added:

“The biggest concern with these systems is false positives when the system wrongly identifies someone who isn’t actually holding a gun.”

In such a case, an innocent person could be wrongly flagged as a potential mass shooter.

Growing market for AI tools

But such misgivings are unlikely to dampen interest in the sector. The high rate of mass shootings in recent years has led potential targets, such as schools, offices, and retailers, to consider AI security.

Data from the National Center for Education Statistics show that 83% of public schools were using security cameras as of the 2017-18 school year. More recently, the Southeastern Pennsylvania Transportation Authority began an AI-based gun detection program.

Amid this rising interest, Future Market Insights projects that the market for high-tech products capable of detecting concealed weapons will reach $1.2 billion by 2031, almost double its 2022 value of $630 million.

Meanwhile, several companies are already establishing themselves in the sector. One of them is Austin-based Scylla, which offers AI that helps security cameras identify concealed weapons and suspicious activity.

The system notifies officials when it detects a threat and can immediately lock doors and deny access. Kris Greiner, the company's vice president, noted that such a system could significantly improve safety. Other companies, such as Zero Eyes, focus specifically on gun detection.
