AI startup Runway announced on Tuesday that it is granting all users access to its Gen-1 artificial intelligence video generator, allowing them to create new videos from existing ones using image and text prompts. Until now, the tool was only available to a small number of users.

In a tweet, Runway, which makes a web-based, machine learning-powered video editor, said all content creators can now use Gen-1 to “generate new videos out of existing ones with an image or text prompt.” The company unveiled Gen-1, its first AI video editing model, in February.

The software makes new videos from existing uploaded footage, applying effects described by an image or text prompt. The final video retains aspects of the original, such as its structure and motion, but is stylized differently.

The wait is over.

Gen-1 is now available at https://t.co/ekldoIshdw pic.twitter.com/Wm2YVOvm26

— Runway (@runwayml) March 27, 2023

What can you do with Gen-1?

Runway has been at the forefront of AI-powered creative assets, with over 30 tools that help users ideate, generate, and edit content. The New York-based company helped to create the popular Stable Diffusion AI image generator last year.

According to Runway, Gen-1 can turn a realistically filmed scene into an animated render while still retaining the “proportions and movements of the original scene.” Users can also edit video by isolating subjects in said video and modifying them with simple text instructions.

Gen-1 comes with five different modes for styling your videos:

Stylization: Transfer the style of any image or prompt to every frame of your video.

Storyboard: Turn mockups into fully stylized and animated renders.

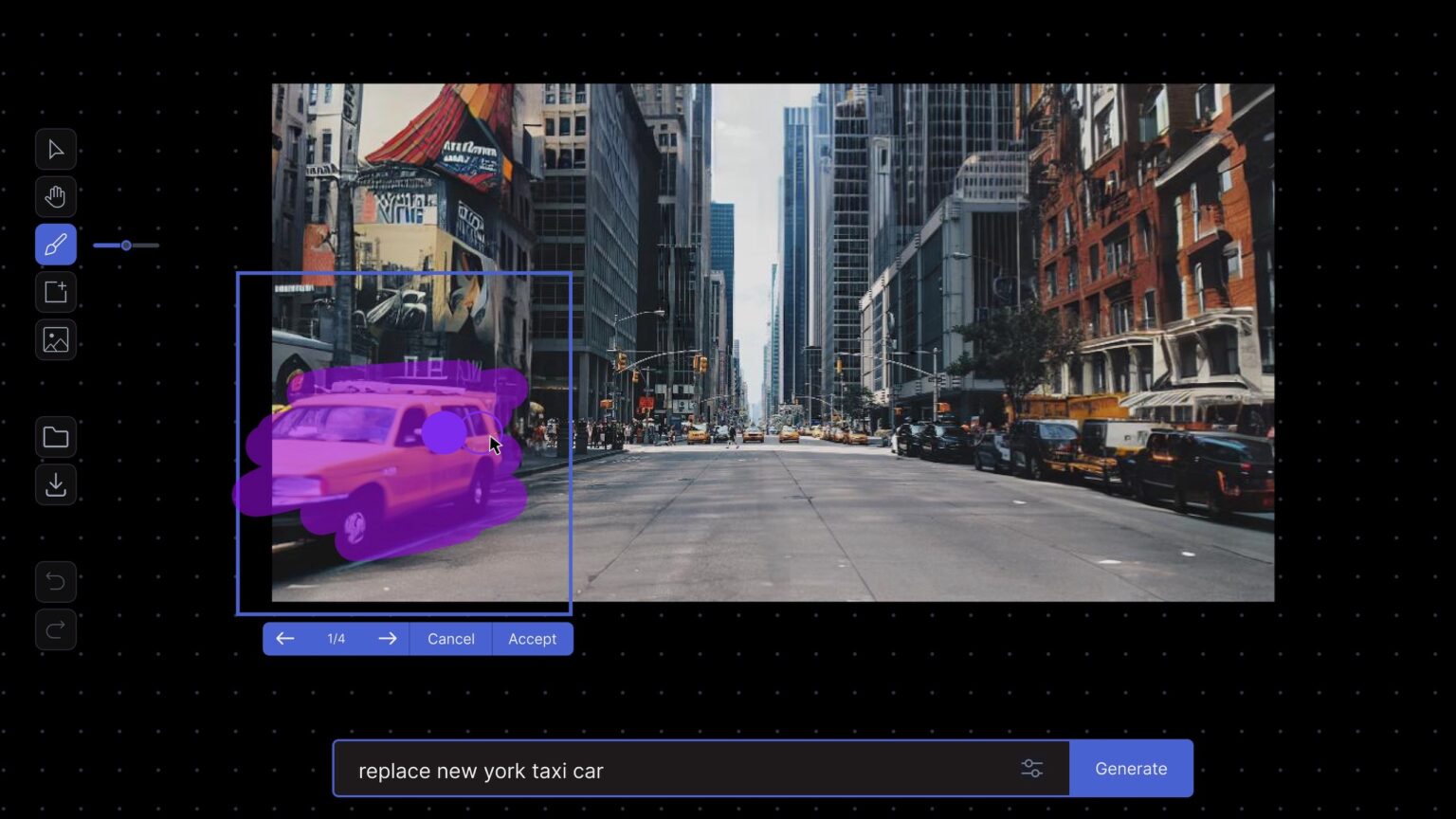

Mask: Isolate subjects in your video and modify them with simple text prompts.

Render: Turn untextured renders into realistic outputs by applying an input image or prompt.

Customization: Lastly, users can customize Gen-1 to create exactly what they wish using more options.

Getting started with Gen-1

If you wish to use Gen-1 to make videos from existing images and text prompts, here is how you could go about it. First, you need to sign up for Runway. Go to the company’s website, create an account and log in.

Once there, navigate to “Gen-1: Video to video” in AI Magic Tools. Then, select or upload an input video. You can upload multiple videos or images all at once. Next, choose how to define the style you want. There are at least three methods:

Driving Image: Select or upload a reference photo to transfer the style of the image to your input video.

Text Prompt: You can add a text prompt describing the style you want to be applied to the video you uploaded. Multiple text prompts can also be added to generate multiple videos.

Presets: Choose one of the available preset styles to apply to your input video.

When you’ve added your style inputs, click the “generate” button. Gen-1 will use your images and text inputs to generate the desired videos. The process may take a few minutes, depending on the number of images and text prompts you have uploaded.

Videos can be downloaded separately or all at once, and it is possible to edit videos after downloading, using video editing software provided on the platform. You can add music, sound effects, and text overlays to make videos more engaging.

Turn first thoughts into finished films. With Gen-1.

Now available at https://t.co/ekldoIshdw pic.twitter.com/vlnO73BGYT

— Runway (@runwayml) March 28, 2023

Gen-1 comes with a free option that allows you to create up to three video projects. If you want to do more with Runway, you’ll have to pay $12 per month for the standard service or $28 per month for the pro subscription.

Runway’s AI development

Runway was founded by artists who aimed “to bring the unlimited creative potential of AI to everyone, everywhere with anything to say.” As MetaNews reported, the company announced last week the release of Gen-2, a more advanced AI model than Gen-1.

Gen-2 is a multi-modal AI system that can generate novel videos with text, images, or video clips. It has two modes: text-to-video mode, which allows users to create videos of any style by providing a text prompt, and “text + Image to video,” which generates a video from text and image prompts.

Gen-2 includes all the capabilities of its predecessor. It is based on research into generative diffusion models by Runway research scientist Patrick Esser, posted last month on arXiv, the preprint server hosted by Cornell University.
