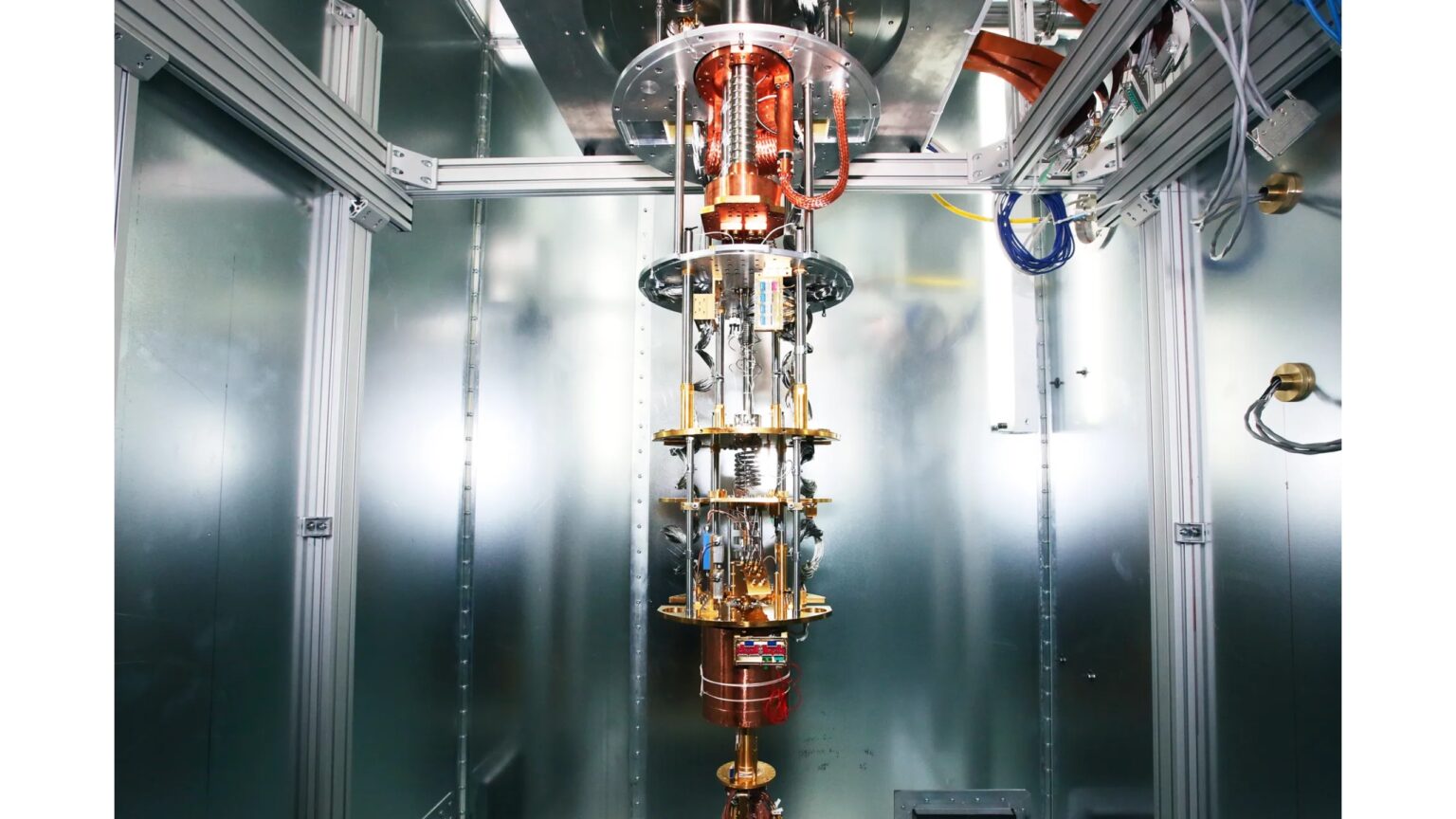

Alphabet Inc’s Google has shared information about the supercomputers it uses to train AI models, claiming they are both faster and more power-efficient than comparable systems based on Nvidia’s A100 chip. The systems are built on Google’s custom chip, the Tensor Processing Unit (TPU), which is now in its fourth generation.

According to the tech giant, it uses the chips for more than 90% of its AI training work. Google adds that the chips feed data through models to make them useful at tasks such as producing human-like text or generating images.

TPUs are designed to accelerate the inference phase of deep neural networks (DNNs), which are used in many machine learning applications such as image recognition, speech recognition and natural language processing. TPUs are also used for training DNNs.

On Tuesday, Google published a scientific paper explaining how it has strung more than 4 000 of the chips together into a supercomputer. According to the firm, it used custom-developed optical switches to help connect the individual machines.

In the scientific paper, Google said that for comparably sized systems, its chips are up to 1.7 times faster and 1.9 times more power-efficient than a system based on Nvidia’s A100 chip, which was on the market at the same time as the fourth-generation TPU.
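To put those ratios in more concrete terms, the short Python sketch below converts them into the share of time and energy a TPU-based system would need for the same workload. It is only a back-of-the-envelope illustration: the function name `relative_cost` is hypothetical, and it assumes the "up to" figures apply to the same job and that "power-efficient" means work done per unit of energy, neither of which the article confirms.

```python
def relative_cost(speedup: float, efficiency_gain: float) -> tuple[float, float]:
    """Return (relative_time, relative_energy) for running the same workload.

    Assumption: 'speedup' is work per unit time and 'efficiency_gain' is
    work per unit energy, both relative to the baseline system.
    """
    if speedup <= 0 or efficiency_gain <= 0:
        raise ValueError("ratios must be positive")
    return 1.0 / speedup, 1.0 / efficiency_gain


# Best-case figures quoted by Google ("up to" 1.7x and 1.9x).
rel_time, rel_energy = relative_cost(1.7, 1.9)
print(f"Time:   {rel_time:.0%} of the A100-based system")
print(f"Energy: {rel_energy:.0%} of the A100-based system")
```

Under those assumptions, the best case works out to roughly 59% of the time and 53% of the energy of the A100-based system.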

More improvements required

Analysts expect the market for inference chips to grow quickly as businesses build AI technologies into their products. Firms like Google, however, are already working on how to keep a lid on the extra costs that doing so will add, one of which is electricity.

Large language models that drive products such as Google’s Bard or OpenAI’s ChatGPT have grown vastly in size. In fact, they are far too large to store on a single chip.

Instead, the models must be split across thousands of chips, which then work together for weeks or more to train the model. As such, improving the connections between those chips has become a key point of competition among companies that build AI supercomputers.

Google’s most significant publicly disclosed language model to date, PaLM, was trained by splitting it across two of the 4 000-chip supercomputers over 50 days.

According to the firm, its supercomputers make it easy to configure connections between chips on the fly.

“Circuit switching makes it easy to route around failed components,” said Google Fellow Norm Jouppi and Google Distinguished Engineer David Patterson in a blog post about the system.

“This flexibility even allows us to change the topology of the supercomputer interconnect to accelerate the performance of an ML (machine learning) model.”

There is no comparison according to Google

Nvidia dominates the market for training AI models with huge amounts of data. However, after those models are trained, they are put to wider use in what is called “inference”, doing tasks like generating text responses to prompts or deciding whether an image contains a cat.

Major software studios currently use Nvidia’s A100 processors, which are the chips most commonly used by development studios for AI and machine learning workloads.

The A100 is suited for machine learning models that power tools like ChatGPT, Bing AI, or Stable Diffusion. It’s able to perform many simple calculations simultaneously, which is important for training and using neural network models.

While Nvidia turned down requests for comment by Reuters, Google said it did not compare its fourth-generation chip to Nvidia’s current flagship H100 chip because the H100 came to market after Google’s chip and is made with newer technology.

Google also said the company has “a healthy pipeline of future chips,” without giving finer details, but hinted that it might be working on a new TPU that will compete with Nvidia’s H100.

Although Google is only releasing details about its supercomputer now, it has been online inside the company since 2020 in a data center in Mayes County, Oklahoma.

Google said that startup Midjourney used the system to train its model, which generates fresh images after being fed a few words of text.
