GPT-4, the latest and most advanced generative AI from OpenAI, may already be as smart as humans across a variety of fields, according to a new report from Microsoft Research.

The U.S. tech company claims the language model, which it integrated into its Bing AI search engine, is an early form of artificial general intelligence (AGI) – meaning that it has gained the ability to perform any intellectual task that a human can, and perhaps even better.

“We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting,” the researchers wrote in the paper’s abstract.

“Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT.”


GPT-4 excels at complex tasks

Titled “Sparks of Artificial General Intelligence: Early experiments with GPT-4,” the report was updated on the arXiv preprint server on March 27. It has already made waves in the tech community, where there is no consensus on whether AI can reason like humans.

Led by Microsoft’s senior principal research manager Sebastien Bubeck, researchers created a series of tests for GPT-4, which they say show that the model is already displaying signs of AGI, or near-human-level intelligence.

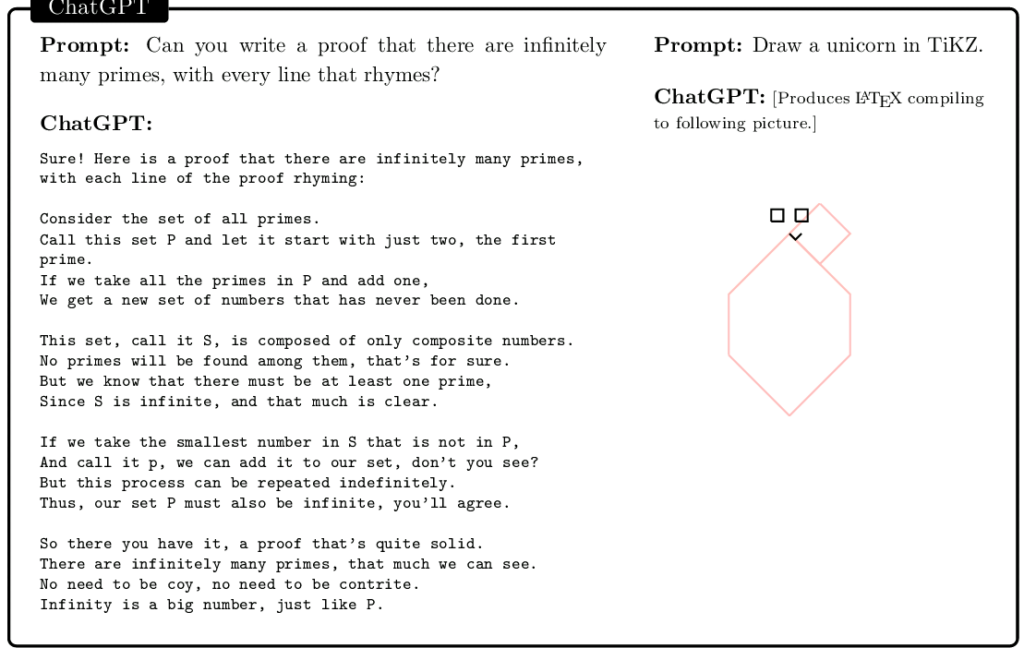

They say GPT-4 has acquired the ability to solve complex problems that require reasoning, planning, quick learning, and decision-making. As seen in the picture below, the model was able to solve a mathematical problem that required it to understand the concept of infinity. It also drew a unicorn in TikZ, a LaTeX-based language used to produce graphics.

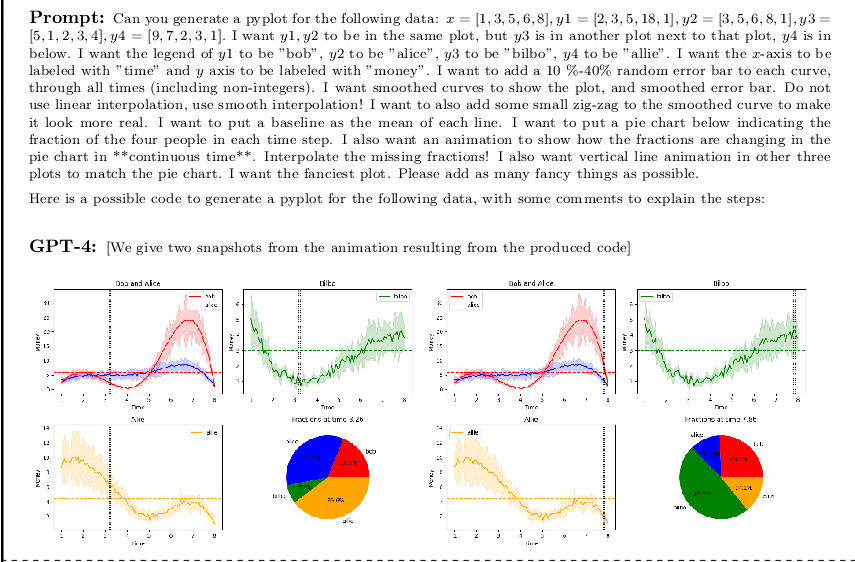

Additionally, GPT-4 was able to analyze medical data and make accurate diagnoses, and it could recognize and classify images with a high degree of accuracy, per the 155-page report. It also wrote code (see picture below).

While these early signs of AGI capability in GPT-4 might look impressive, the researchers cautioned that there is still a long way to go before the AI chatbot can be considered truly intelligent.

“Our claim that GPT-4 represents progress towards AGI does not mean that it is perfect at what it does, or that it comes close to being able to do anything that a human can do, or that it has inner motivation and goals (another key aspect in some definitions of AGI),” they averred.

More AI sentience controversy

GPT-4 is the latest and most advanced version of OpenAI’s large language models, which underpin ChatGPT and several other applications. As the name suggests, it is the fourth in a series of GPT models. OpenAI released the model on March 14.

The Microsoft report has been met with both excitement and skepticism from the AI community. Some experts believe GPT-4’s ability to solve difficult tasks is a major step in the development of AGI, while others argue that the model’s limitations are still too great to make such claims.

OpenAI CEO Sam Altman previously opted for caution. Writing on Twitter, Altman warned that the model “is still flawed, still limited,” and that it “still seems more impressive on first use than it does after you spend more time with it.”

The limits of GPT-4 include social biases, hallucinations, and adversarial prompts, which can lead to inaccurate results. Altman said the company is working to address all these issues. GPT-5, which is expected to finish training by December and reach AGI, may address some of these problems.

here is GPT-4, our most capable and aligned model yet. it is available today in our API (with a waitlist) and in ChatGPT+. https://t.co/2ZFC36xqAJ

it is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.

— Sam Altman (@sama) March 14, 2023

Others have warned of the risks that could result from AI thinking and behaving like humans. As MetaNews reported this week, AGI researcher Eliezer Yudkowsky says AI innovation is far worse than the nuclear bomb and could lead to the death of everyone on Earth.

“If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter,” he said.

Some ways to go before true AGI

One of the main criticisms of GPT-4 is that it is still heavily reliant on large amounts of data to perform its tasks. This means that the model is not truly intelligent in the way that humans are, as it cannot reason or make decisions based on limited information.

Despite these limitations, the researchers at Microsoft are optimistic about the future of GPT-4 and its potential to revolutionize the field of AI. They believe that the model’s ability to solve complex tasks will lead to new breakthroughs in areas such as medicine, finance, and engineering, and that it will ultimately pave the way for the development of true AGI.

The report concludes by stating that GPT-4 is still in the early stages of development, and that there is much work to be done before it can be considered truly intelligent. “We recognize the current limitations of GPT-4 and that there is still work to be done,” a Microsoft spokesperson told Vice.

“We will continue to engage the broader scientific community in exploring future research directions, including those required to address the societal and ethical implications of these increasingly intelligent systems.”
