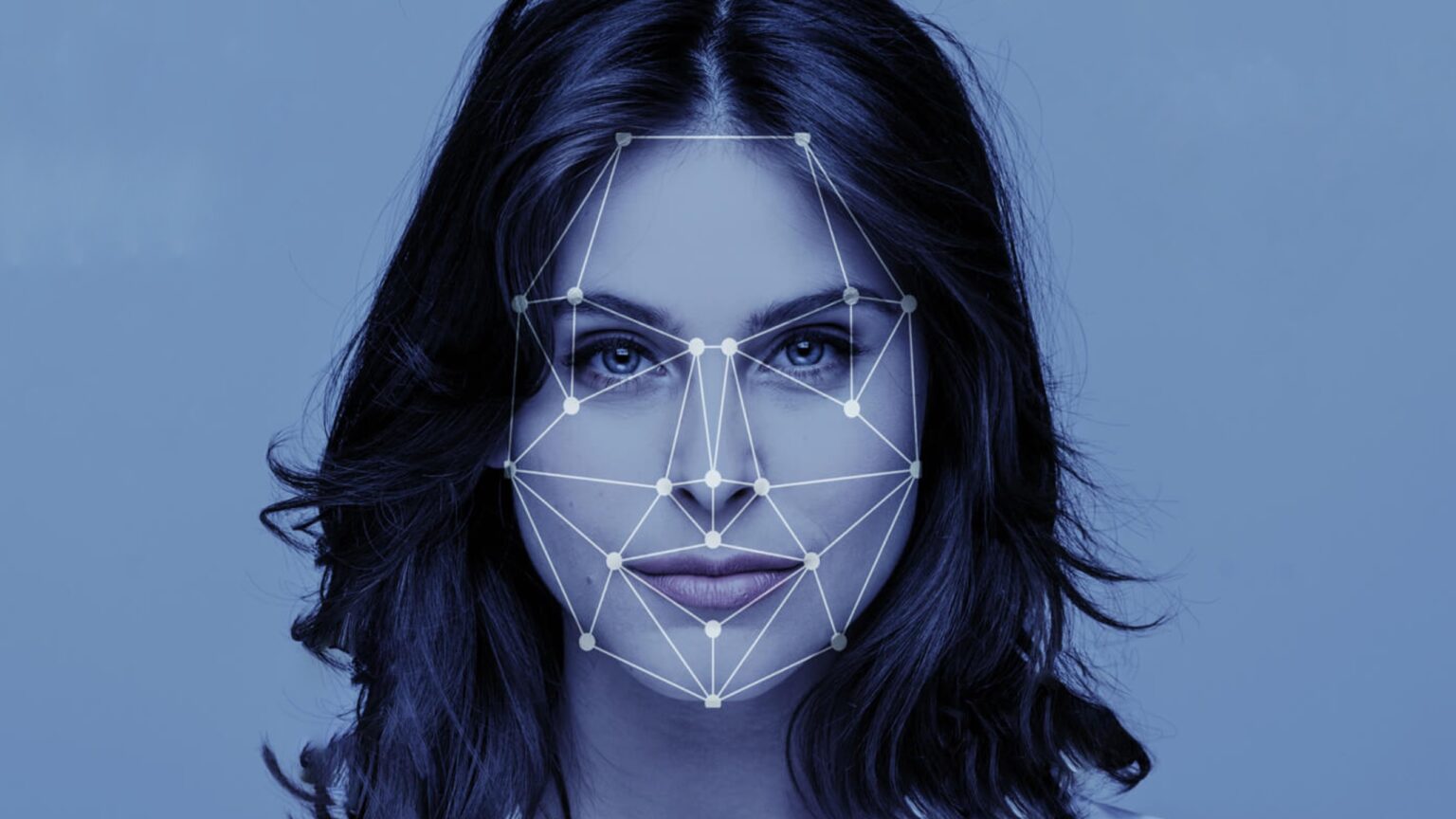

Facial recognition technology has rapidly woven itself into our everyday lives, from unlocking smartphones to personalized advertising.

Yet as it becomes increasingly omnipresent, the debate over the ethical and legal boundaries of its use has intensified. A leading tech reporter, Kashmir Hill of The New York Times, has recently shed light on a particularly secretive firm in this sector: Clearview AI. Hill’s investigation suggests that this stealthy startup may be dramatically redefining privacy norms, all while staying under the radar of public scrutiny.

We're standing on the brink of a world in which facial recognition runs amok and anonymity is impossible. I think it's still possible to intervene before that becomes fully normalized.

Read my new book on this which gets a nice shoutout in this story! https://t.co/L5Xit64fdH https://t.co/xoMjIbG2iR

— Kashmir Hill (@kashhill) September 25, 2023

Clearview AI’s alarming capabilities

A powerful facial recognition tool is at the heart of Clearview AI’s offering. Simply upload a photo of an individual, and the tool promises to show you everywhere that face appears online. This includes major platforms like Facebook, Instagram, and LinkedIn, as well as less obvious ones like Venmo. It doesn’t just bring up profiles; it could expose pictures you weren’t even aware were online, potentially compromising privacy on an unprecedented scale.

A tool rife with controversy

While the advantages for law enforcement are clear—solving crimes, locating suspects, and more—the potential misuse is disconcerting. Hill paints a few harrowing hypotheticals: a woman exiting a clinic being identified and harassed by protestors, or a stranger at a bar using the tool to unearth a wealth of personal data about someone they’ve just met. This lack of control over personal images has raised alarm bells in many quarters.

Further aggravating concerns is the technology’s accuracy, or potential lack thereof. Instances of misidentification, especially among people of color, have been reported. This not only amplifies racial biases in policing but can also have grave implications for those wrongfully accused.

From Google’s Labs to Clearview’s offerings

Interestingly, Clearview AI is not the first to venture into this controversial realm. Tech behemoths like Google and Facebook have previously dabbled in similar technologies. However, recognizing the potential pitfalls, they chose to withhold their versions from public use. This self-imposed restraint makes Clearview AI’s strides even more significant.

Clearview AI didn’t just develop innovative technology; they also capitalized on a unique data-gathering method. They systematically scraped billions of photos from the internet, pulling from many sources without explicit permission from the platforms or individuals featured.

Clearview’s under-the-radar operations

For much of its early existence, Clearview AI remained enigmatic. Even locating its office proved challenging for Hill, as the given address led to a non-existent building. Early attempts to contact company representatives hit walls. However, as Hill deepened her investigation, she discovered that Clearview was tracking her moves. Every time law enforcement attempted to run her image through Clearview’s system, the company was alerted and often intervened, showcasing its invasive surveillance capabilities.

The growing push for regulatory oversight

With such powerful technology at the company’s fingertips and a demonstrated willingness to use it aggressively, calls for regulatory oversight of Clearview AI have grown louder. Some states in the U.S., including California, Colorado, Virginia, and Connecticut, have put legal provisions in place that allow residents to request that their data be deleted from Clearview’s database.

However, more than these piecemeal regulations might be needed. As Clearview AI and companies like it continue to push the envelope on facial recognition, a comprehensive national or even global approach may be essential to protecting citizens’ privacy.

Kashmir Hill’s revelations regarding Clearview AI underscore the urgent need for public discourse on the boundaries of facial recognition technology. As the line between public and private blurs, society must decide where the limits lie and who can draw them.
