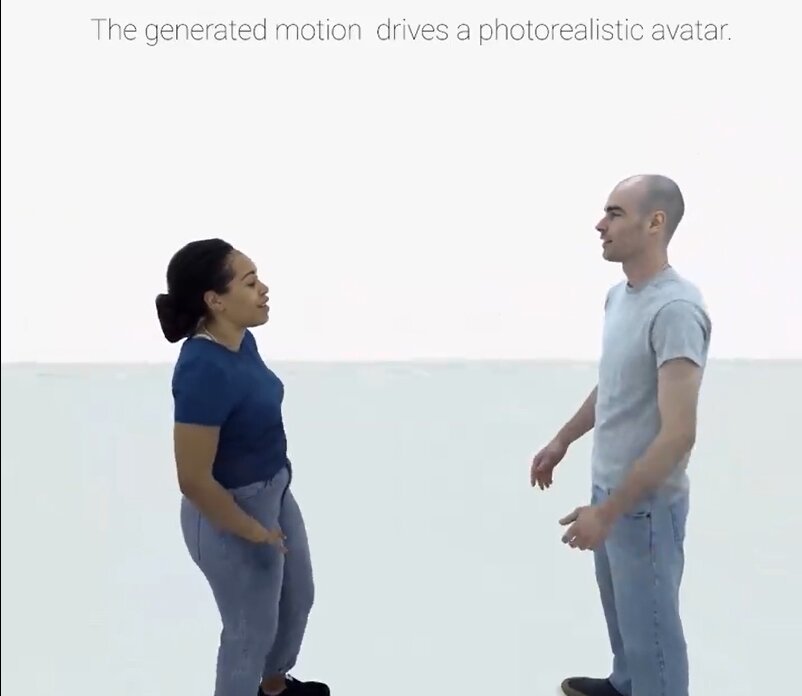

Meta has introduced another AI concept into the metaverse industry. According to a recent tweet by Allen T., an AI educator and developer, the company released a new framework called Audio2Photoreal.

Audio2Photoreal is a framework for generating full-bodied, photorealistic avatars that gesture naturally in response to a speaker's voice. The avatars are animated by speech audio, which drives their gestural motion.

Given raw speech audio, the system generates corresponding photorealistic gestures. It consists of two generative models: one produces the avatar's facial expression codes and the other its body poses.

Clips shared by Allen T. show the audio driving several parts of the avatar, including the mouth, hands, and face.

Meta introduces Audio2Photoreal

Generating full-bodied photorealistic avatars that naturally gesture driven by the author's voice

The metaverse is going to be fun.

6 fun examples and demo below: pic.twitter.com/EeImouEakC

— Allen T (@Mr_AllenT) January 4, 2024

The released demos include multiple generated samples, two-person conversations, generated female avatars, and guide poses driving the diffusion model. Allen T. added that this development will make the metaverse fun. Comments on the post suggest the tech community is excited. User @EverettWorld tweeted, "If Metaverse looks like this, I'm in!"

However, not everyone was enthusiastic. User @AIandDesign said they no longer trust Meta, citing the Cambridge Analytica scandal, and called the company harmful to humanity:

“This is all so cool. I just wish it wasn’t Meta. I don’t trust them much anymore. After the whole Cambridge Analytica thing, I’m totally done with Meta. They are harmful to humanity. Literally. I’m on FB but only for family stuff.”

The technology behind the Audio2Photoreal concept

A paper on arXiv, an open repository where researchers share preprints before peer review, offers more insight into Audio2Photoreal.

The avatar's body motion is synthesized by a diffusion model conditioned on the input audio, with guide poses steering the generation. The facial motion comes from a separate audio-conditioned diffusion model.

The two are modeled separately because the body and face follow very different dynamics: the face is strongly correlated with the input audio, while the body is only weakly correlated with speech.

Importance of Audio2Photoreal in the metaverse

Meta's work on the metaverse aims to make the ecosystem more realistic. Audio2Photoreal avatars can mirror a person's facial expressions and body gestures using audio alone.

The result resembles a face-to-face conversation. Each avatar can reflect the person's distinctive physical traits, such as height, skin and hair color, and body shape. Because the avatars are driven by audio, working in the metaverse becomes more flexible: no webcam, video feed, or high-quality smartphone camera is required.

Facebook, X, and Instagram sue Ohio to stop social media law

In another recent development, NetChoice, a trade group representing social media platforms such as Facebook, Instagram, and X, filed a lawsuit on Jan. 5 challenging Ohio's new social media law.

The 34-page complaint seeks to block Ohio's Social Media Parental Notification Act, which was set to take effect on Jan. 15 and would apply only to accounts created after that date.

The law requires platforms to obtain parental consent for users under 16. The lawsuit, however, argues that the law would "place a significant hurdle on some minors' ability to engage in speech on those websites."
