Meta, the tech firm formerly known as Facebook, is adding artificial intelligence to its Ray-Ban smart glasses, taking wearable technology to the next level.

In a blog post, the company showed off its Meta AI chatbot, which can now see, hear, and identify objects through the glasses’ built-in camera and microphone. The update points toward a hands-free user experience and expands the glasses’ functionality and potential applications.


“Hey, Meta” – A Voice-Activated Assistant

Ray-Ban Meta smart glasses users can now engage with the Meta AI chatbot by saying the wake phrase “Hey, Meta.” This voice-activated assistant is poised to alter how individuals interact with wearable devices. Users can not only talk to the chatbot but also ask it to take photos and suggest captions for the images captured, adding a new dimension to how they document their lives.

“You can also take a photo, either by voice or by using the capture button, and within 15 seconds, say ‘Hey Meta…’ to ask a question about the photo.”

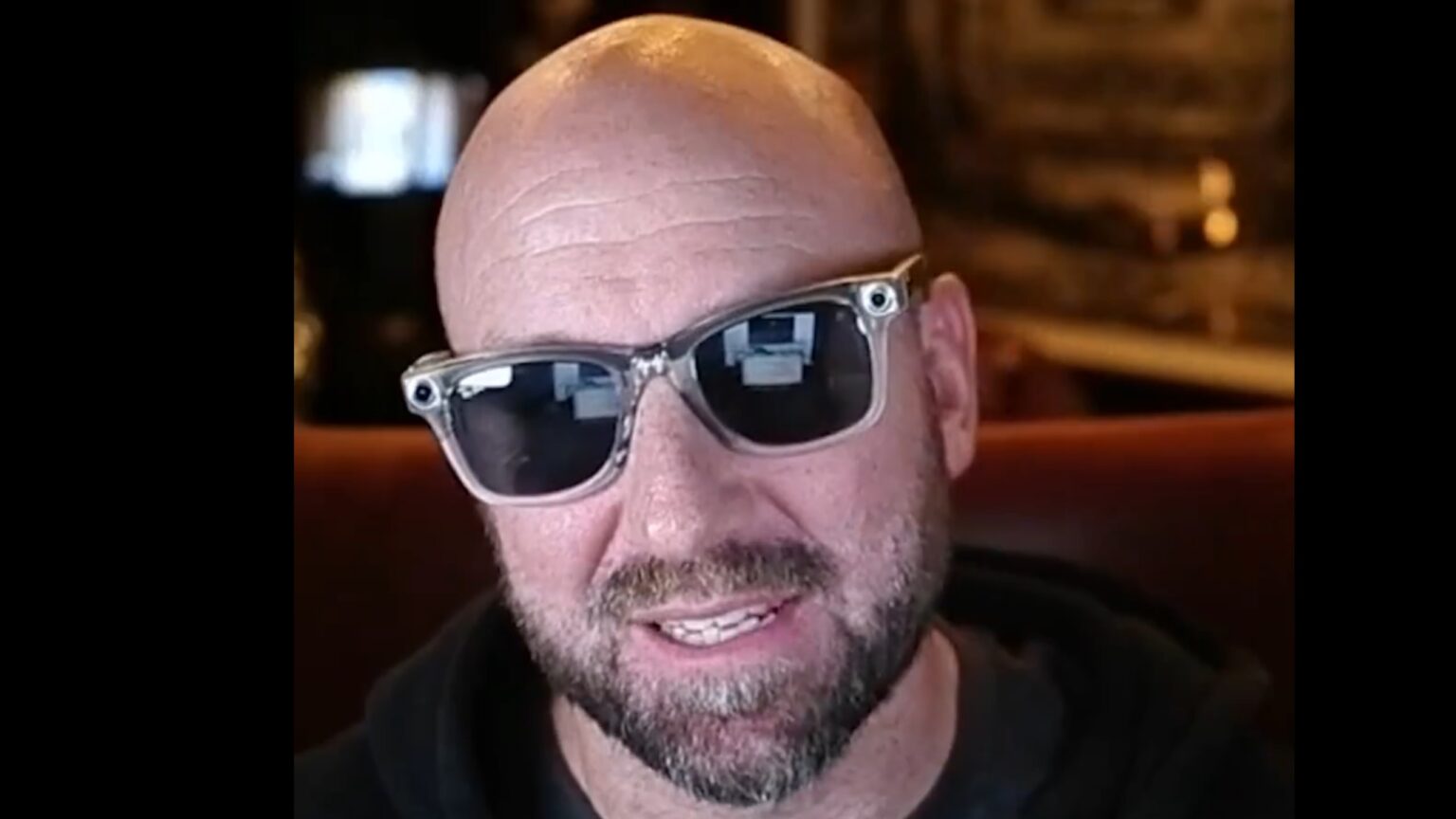

In a demonstration shared by Mark Zuckerberg on Instagram, the Meta CEO showcased the chatbot’s capabilities. He asked the AI to suggest pants that would complement a shirt he had in hand. The chatbot responded by accurately describing the shirt and offering a couple of suggestions for pants that would go well with it. This feature could be a game-changer for those seeking fashion advice or looking to streamline their wardrobe choices.

https://www.instagram.com/p/C0w4Agjvq5_/

The AI assistant doesn’t stop at fashion recommendations. It can also translate text, making it a valuable tool for travelers or anyone facing a language barrier. Furthermore, it can provide information about objects, helping users gain insight into their surroundings. These capabilities bring to mind other AI products from tech firms like Microsoft and Google, underscoring the growing integration of AI into our daily lives.

A window to the world

In the same vein, CTO Andrew Bosworth shared a video in which the AI assistant accurately described a lit-up, California-shaped wall sculpture. The demonstration highlights the glasses’ ability to provide information about the wearer’s surroundings, supporting a broader understanding of and interaction with the environment.

https://www.instagram.com/p/C0w5mMnPj4B/

While these features appear to be transformative, Meta is initially offering them to a select group of users in the United States as part of an early access program. Andrew Bosworth mentioned that the test period would be limited to “a small number of people who opt-in.” This exclusivity adds an element of intrigue to the technology, leaving many wondering when they will have the chance to experience the potential of these AI-powered smart glasses.

The integration of Bing into the glasses also opens up opportunities for real-time information retrieval. Users can ask the glasses questions like, “Hey Meta, is there a pharmacy close by?” and receive up-to-date information from the web. This feature could prove invaluable in various scenarios, from finding nearby services to getting timely updates on local events.

“In addition to the early access program, we’re beginning to roll out the ability for Meta AI on the glasses to retrieve real-time information, powered in part by Bing.”

A comprehensive experience

To ensure a seamless user experience, the Meta View app facilitates the pairing of the smart glasses with a smartphone. It not only assists in connectivity but also serves as a repository for all AI responses and associated photos. This feature allows users to conveniently access their queries and interactions in one place, enhancing the overall utility of the device.
