Researchers have unearthed over 100 malicious machine learning (ML) models on the Hugging Face AI platform that can enable attackers to inject malicious code onto user machines.

Although Hugging Face implements security measures, the findings highlight the growing risk of “weaponizing” publicly available models as they can create a backdoor for attackers.

The findings by JFrog Security Research form part of an ongoing study to analyze how hackers can use ML to attack users.

Harmful content

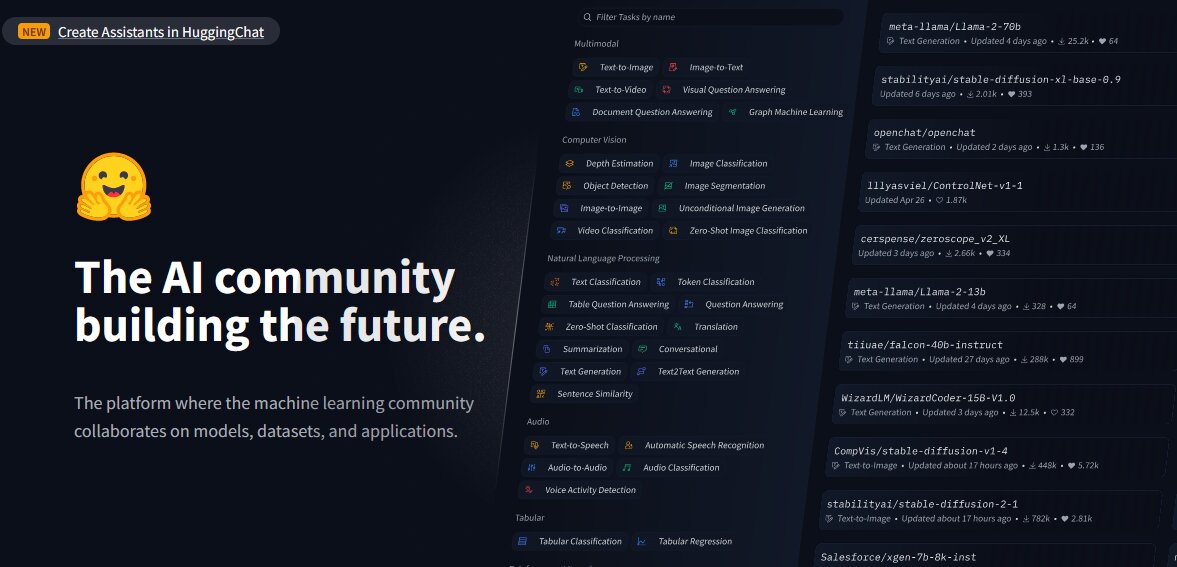

According to an article by Computing, the researchers developed an advanced scanning system to scrutinize PyTorch and TensorFlow Keras models hosted on the Hugging Face platform.

Hugging Face is a platform developed for sharing AI models, datasets, and applications. Upon analyzing the models, the researchers discovered harmful payloads “within seemingly innocuous models.”

This is despite Hugging Face's security measures, which include malware and pickle scanning. However, the platform does not restrict downloads of potentially harmful models, which leaves publicly available AI models open to abuse and weaponization.
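Pickle scanning of this kind can be approximated by reading a file's opcodes without ever loading it. The sketch below is not Hugging Face's or JFrog's scanner. The names `find_imports`, `looks_suspicious`, and `SUSPICIOUS_MODULES` are hypothetical, the denylist is illustrative rather than complete, and the code assumes it is handed raw pickle bytes.

```python
import pickle
import pickletools

# Illustrative denylist (an assumption, not an official or complete list).
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "socket", "sys"}


def find_imports(data: bytes):
    """Return (module, name) pairs the pickle would import, without loading it."""
    imports = []
    recent_strings = []  # string arguments seen so far, used by STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols store "module name" as one space-separated argument.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Protocol 4+ pushes module and name as two strings beforehand.
            if len(recent_strings) >= 2:
                imports.append((recent_strings[-2], recent_strings[-1]))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return imports


def looks_suspicious(data: bytes) -> bool:
    """Flag pickles that import anything from a denylisted top-level module."""
    return any(
        module.split(".")[0] in SUSPICIOUS_MODULES
        for module, _name in find_imports(data)
    )


# Pickling a builtin function records only a reference to it.
sample = pickle.dumps(print)
print(find_imports(sample))      # [('builtins', 'print')]
print(looks_suspicious(sample))  # True
```

A denylist like this is easy to evade, which is consistent with the researchers' point that scanning alone does not stop harmful models from being downloaded.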

On examining the platform and the existing models, JFrog’s security researchers discovered about 100 AI models with malicious functionality, according to their report.

Some of these models, the report indicates, are capable of executing code on users’ machines, “thereby creating a persistent backdoor for attackers.”

The researchers also said that the findings exclude false positives and therefore accurately represent the prevalence of malicious models on the platform.

The examples

According to JFrog’s report, one of the “alarming” cases involves a PyTorch model. The model, which was subsequently deleted from the Hugging Face platform, was reportedly uploaded by a user identified as “baller423.”

On closer inspection, the researchers found that the model contained a malicious payload that enabled it to establish a reverse shell on a specified host (210.117.212.93).

JFrog senior security researcher David Cohen wrote: “(It) is notably more intrusive and potentially malicious, as it establishes a direct connection to an external server, indicating a potential security threat rather than a mere demonstration of vulnerability.”

The payload leverages “Python’s pickle module’s ‘__reduce__’ method to execute arbitrary code upon loading the model file, effectively bypassing conventional detection methods.”
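The mechanism is easy to reproduce with a harmless payload. In this minimal sketch, the hypothetical `Demo` class stands in for a tampered model object, and `print` stands in for whatever callable an attacker would choose. It is an illustration of documented pickle behavior, not a reconstruction of the actual payload.

```python
import pickle


class Demo:
    """Hypothetical stand-in for an object embedded in a model file."""

    def __reduce__(self):
        # Instead of describing how to rebuild a Demo instance, tell pickle
        # to call print(...) at load time. Any importable callable works here.
        return (print, ("this ran during pickle.loads",))


blob = pickle.dumps(Demo())

# Loading the bytes invokes the callable chosen in __reduce__.
restored = pickle.loads(blob)

# The loader gets back the callable's return value, not a Demo instance.
print(type(restored).__name__)
```

Because the call happens inside `pickle.loads`, simply opening an untrusted model file is enough to trigger it, which is why Python's documentation warns against unpickling data from untrusted sources.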

The researchers also observed the same payload connecting to different IP addresses, “suggesting operators may be researchers rather than malicious hackers.”

A wake-up call

The JFrog team noted the findings are a wake-up call for Hugging Face, showing its platform is prone to manipulation and potential threats.

“These incidents serve as poignant reminders of the ongoing threats facing Hugging Face repositories and other popular repositories such as Kaggle, which could potentially compromise the privacy and security of organizations utilizing these resources, in addition to posing challenges for AI/ML engineers,” said the researchers.

This comes as cybersecurity threats increase worldwide, fueled by the proliferation of AI tools that bad actors abuse for malicious purposes. Hackers are also using AI to advance phishing attacks and trick people.

However, the JFrog team made other discoveries.

A playground for researchers

The researchers also noted that Hugging Face has evolved into a playground for researchers “who wish to combat emerging threats, as demonstrated by the diverse array of tactics to bypass its security measures.”

For instance, the payload uploaded by “baller423” initiated a reverse shell connection to an IP address range that belongs to Kreonet (Korea Research Environment Open Network).

According to Dark Reading, Kreonet is a high-speed network in South Korea supporting advanced research and educational activities; “therefore, it’s possible that AI researchers or practitioners may have been behind the model.”

“We can see that most ‘malicious’ payloads are actually attempts by researchers and/or bug bounty to get code execution for seemingly legitimate purposes,” said Cohen.

Despite these seemingly legitimate purposes, the JFrog team warned that the strategies employed by researchers clearly demonstrate that platforms like Hugging Face are open to supply-chain attacks. These, according to the team, can be customized to target specific demographics, such as AI or ML engineers.
