Malicious actors are using AI-generated videos on YouTube to lure people into downloading malware that steals sensitive information.

CloudSEK wrote that, within the last six months, it discovered a 300% increase in YouTube videos whose descriptions link to infostealer malware such as RedLine, Vidar, and Raccoon.

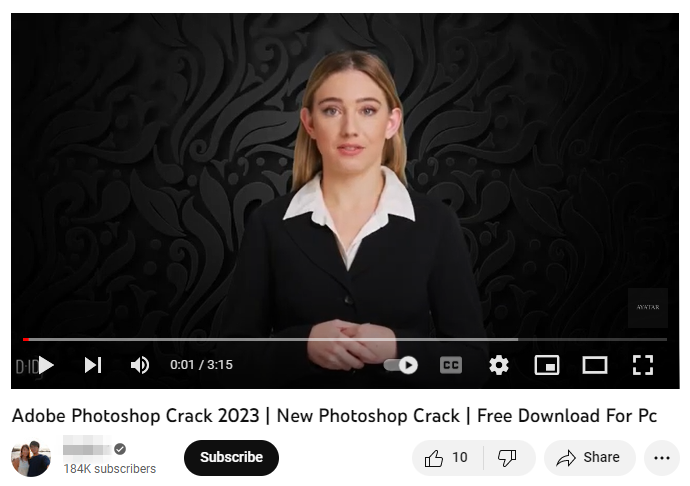

According to the digital security firm, these videos are uploaded as tutorials for online users who want to download cracked versions of popular paid software like AutoCAD, Autodesk 3ds Max, Microsoft Office Suite, Photoshop, and others.

The malicious players use these AI-generated videos to trick viewers into entering their personal information and downloading the infostealer malware. Once installed, the malware uploads users’ data, including credit card information, bank details, passwords, crypto wallet data, and sensitive documents, directly to the attacker’s command-and-control server.

Experts warn of growing AI-powered fraud

Several experts have warned that malicious players could use AI to spread fraud. As AI algorithms become more sophisticated, fraudsters can use them to generate convincing phishing scams, fake news, and deepfakes.

Fraudsters leverage artificial intelligence and machine learning to create more convincing and complex scams while automating the fraud process to scale their efforts. This makes it increasingly difficult for traditional methods of detection to keep pace.

To combat these AI-powered frauds, experts have recommended using AI and machine learning technologies to detect and prevent fraudulent activities. AI can help identify fraud patterns, analyze large volumes of data, and improve fraud detection accuracy.

Additionally, experts have recommended that people stay vigilant when using the web. According to them, users should educate themselves about the latest fraud trends and tactics, use strong passwords, and implement multi-factor authentication to protect their accounts against these players.

“As if people didn’t have enough to worry about when using today’s internet, now AI-generated YouTube videos enter the picture,” said Matt Ridley, founder of cybersecurity firm Ascent Cyber.

“Consumers need to be on high alert as these scams are becoming more difficult to spot. Certainly companies like ours will be putting AI to our own use to counteract such frauds, but everyday users are the first line of defence and must remain vigilant.”

AI needs more regulation

As AI technology advances, it poses a significant risk to users, especially regarding fraud. The sophistication of AI algorithms means that fraudsters can use them to create convincing phishing scams, fake news, and other content that is difficult to distinguish from genuine material.

Besides that, there is a growing concern about the potential misuse of AI to commit fraud and the need for regulations to protect users from these risks. In a recent Twitter exchange, OpenAI’s CEO Sam Altman said, “we definitely need more regulation on AI.”

Other stakeholders have argued that these regulations should focus on ensuring transparency in the use of AI, protecting user privacy, and holding companies accountable for the impact of their AI systems.

AI regulations can set standards for further development and ensure that ethical considerations prioritizing transparency and accountability are implemented. A robust regulatory framework will also ensure that AI is designed to benefit society and not just the interests of businesses or governments.
