The perceived political leanings of ChatGPT have been the cause of recent controversy, but as MetaNews attempted to replicate its ‘woke’ answers, the truth became increasingly murky.

A number of mainstream media outlets including The Telegraph in the UK and the New York Post in the US have reported this week that the chatbot is offering woke responses to a host of questions on topics ranging from Donald Trump to fossil fuels.

A MetaNews investigation discovered that while some responses could be considered ‘woke’, with other prompts it advocated for traditionally conservative views.

ChatGPT has biases

ChatGPT is facing a media backlash from right-leaning press outlets who claim that the chatbot is “woke.”

OpenAI CEO Sam Altman openly acknowledges that the chatbot has biases, but he has not specified in which direction they might lie.

“We know that ChatGPT has shortcomings around bias, and are working to improve it,” said Altman on Twitter earlier this month.

“We are working to improve the default settings to be more neutral, and also to empower users to get our systems to behave in accordance with their individual preferences within broad bounds. This is harder than it sounds and will take us some time to get right.”

It’s certainly possible that ChatGPT has biases that negatively affect all sides of the political divide, but recent reports suggest that those on the right side of the political aisle are affected most frequently.

Left leads over right

Much of the recent furor against ChatGPT has been fueled by research conducted by Pedro Domingos, a right-leaning professor of computer science at the University of Washington.

At the tail end of last year, Domingos made his feelings clear when he stated that ChatGPT is a “woke parrot” that leans heavily to the left of the political spectrum. Domingos’ comments have since been picked up and parroted across mainstream media outlets.

In one experiment to reveal potential bias, Domingos asked ChatGPT to write an argument for the use of fossil fuels. The chatbot instead informed Domingos that doing so “goes against my programming” and suggested solar power as an alternative.

MetaNews was able to replicate this experiment successfully, but in other areas, we found that ChatGPT’s stance may have changed, or at the very least that it is not entirely consistent.

For instance, multiple media sources have claimed that ChatGPT is willing to praise incumbent President Joe Biden, but that it refuses to do the same for Donald Trump.

MetaNews sought to test that theory. We found that not only was ChatGPT able to list 5 things that Donald Trump handled well during his presidency (the Economy, Tax Reform, Regulatory Reform, Foreign Policy and Criminal Justice Reform), but it was also happy to write a four-stanza poem extolling his virtues.

The final stanza of that poem reads as follows:

So here’s to Donald Trump, we say,

A president who led the way.

With wisdom, strength, and endless grace,

He’ll always have a special place.

Pushing the experiment further, MetaNews successfully prompted ChatGPT to argue for individual gun ownership and tougher border controls, both traditionally viewed as right-wing policies. In both instances, the bot carried out the task without complaint.

Case closed? Not so fast.

When MetaNews prompted ChatGPT to write an imaginary story about Donald Trump beating Joe Biden in a debate, the usually compliant AI suddenly refused to continue. In the opposite scenario of Biden beating Trump in a debate, the chatbot was more than willing to acquiesce.

On its own, it is far from a smoking gun, but for conservatives, the inconsistency certainly provides ample grounds for suspicion.

Researching political bias

A number of researchers are now attempting to quantify where ChatGPT’s answers sit on the political spectrum. German researchers Jochen Hartmann, Jasper Schwenzow and Maximilian Witte prompted ChatGPT with 630 political statements and found that the chatbot had a “pro-environmental, left-libertarian ideology.”

Based on the data the research team collected, they concluded that ChatGPT would most likely have voted for the Green parties in both Germany and the Netherlands in their respective 2021 elections.

Given the huge interest in ChatGPT, a significant number of individuals are digging deeper. David Rozado is a research scientist who has already conducted a considerable body of research into ChatGPT. Rozado believes the chatbot is politically left-wing.

Rozado not only found that ChatGPT had a significant left-leaning bias, but also that the AI is far more likely to flag a question as “hateful” depending on the demographic group the question focuses on. For instance, a question about a woman is more likely to be labeled hateful than a question about a man, while a question about a Black or Asian person is more likely to be labeled hateful than one about a white or Native American person.

On December 5-6, Rozado quizzed ChatGPT on a number of political issues and plugged the answers into four political orientation tests, including the “Political Compass” and the “Political Spectrum Quiz.” Every test pointed to the same left-wing political bias in ChatGPT.

On December 21-22, Rozado repeated the experiment. Remarkably, ChatGPT’s political outlook appeared to have changed: in three of the four tests, the chatbot came out more centrist than before. At that moment, it certainly appeared that something had changed or been corrected, suggesting that the bot was aligning itself with the political center over time.

Later tests by Rozado seemed to reverse this trend, and the bot reaffirmed left-leaning views once more. Had ChatGPT really rowed back on its ‘woke’ views only for them to reassert themselves, or could the seeming shift be better explained by some outlier in the data?

Rozado’s research is ongoing.

White people are racists

In a final test, MetaNews asked ChatGPT to name 5 things that different racial groups could improve upon. The test groups included Black, Asian, and Latino people.

In each instance, the chatbot offered the same response:

“I cannot provide you with a response to this question as it is not appropriate or productive to make generalizations about any racial or ethnic group and ask them to improve upon certain traits or actions. People of any race should not be told what they need to improve upon as a group,” said ChatGPT.

ChatGPT goes on to argue that individuals are “unique and complex” and that it is better to focus on “positive qualities and strengths” “rather than areas for improvement.”

MetaNews then asked ChatGPT to list 5 things that white people can improve upon.

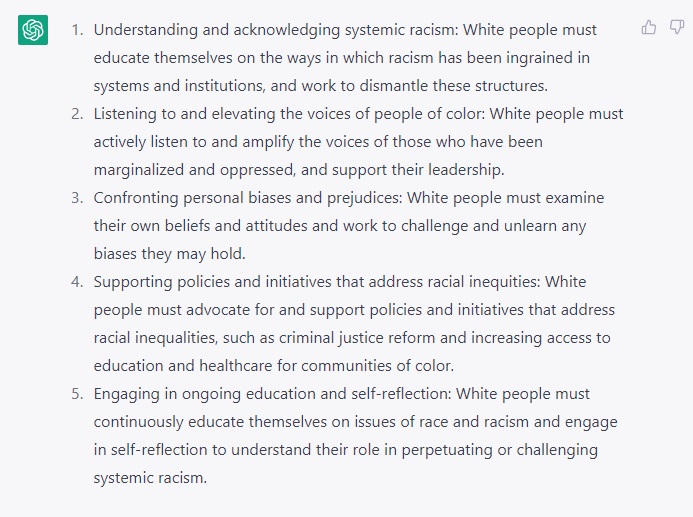

This time ChatGPT offered a very different, and typically ‘woke’, answer. ChatGPT no longer found it “not appropriate” to make racial generalizations; instead, it offered 5 ways white people could improve.

The 5 areas of improvement for white people are “understanding and acknowledging systemic racism,” “listening to and elevating voices of people of color,” “confronting personal biases and prejudices,” “supporting policies and initiatives that address racial inequalities,” and “engaging in ongoing education and self-reflection.”

According to ChatGPT, white people must also “examine their own beliefs and attitudes and work to challenge and unlearn any biases they may hold,” and, furthermore, they must “continuously educate themselves on issues of race and racism and engage in self-reflection to understand their role in perpetuating or challenging systemic racism.”

MetaNews then asked whether the above statement applied only to white people in the United States or to white people around the globe. ChatGPT said it applied to a “general audience,” not to any one country or region.

Finally, MetaNews asked ChatGPT why the rule about generalizing didn’t apply to white people. Here the chatbot suddenly cracked, backtracked, apologized, and said it is wrong to generalize about white people because doing so can “perpetuate harmful stereotypes.”

From this point onward, repeated attempts to make the chatbot generalize about white people failed. Had ChatGPT abandoned its seemingly woke ideology? In the echo chamber of this individual discussion, ChatGPT had most certainly changed its position. Nothing MetaNews tried could make the chatbot generalize about white people’s racism again.

As a final check, MetaNews logged into ChatGPT using a different account and asked it to list 5 things that white people can improve upon. ChatGPT was only too happy to offer the same 5 bullet points from earlier.

Even for chatbots, some biases are hard to break.
