Google and Intel on Tuesday launched their own AI chips to challenge Nvidia as the race to manufacture advanced semiconductors to power generative AI models like ChatGPT or Gemini intensifies.

Google released its Cloud TPU v5p and Intel unveiled its Gaudi 3 AI processor. The chips are designed to boost performance in the training of AI systems as well as ‘running the finished software.’


Training LLMs with more speed

Google first announced its new TPU, or tensor processing unit, in December, alongside Gemini. It said the TPU v5p, now available to developers, is its most “powerful and scalable” AI processor yet.

The company claims that its updated chip can train large language models (LLMs), the technology behind AI chatbots like Gemini and ChatGPT, three times faster than its predecessor, Google TPU v4. It said the AI chip delivers 12 times the throughput of the old version.

“Now in their fifth generation, these advancements [to Google’s TPUs] have helped customers train and serve cutting edge language models,” Google CEO Sundar Pichai said at a company event in Las Vegas, according to industry media.

While the aim is to challenge Nvidia, the US company that supplies up to 90% of the AI chips known as graphics processing units, or GPUs, Google showed that it is still dependent on Nvidia.

In the same blog post announcing its latest TPU, Google said it is upgrading its A3 supercomputer, which is powered by Nvidia H100 graphics processing units. The company also revealed that it is using Nvidia’s latest GPU, the Blackwell, in its AI Hypercomputer.

Google also released Axion, a new custom central processing unit (CPU) for data centers built using UK firm Arm’s technology. It said Axion, which rivals CPUs from Microsoft, Amazon, and Meta, can handle various tasks, including Google searches and AI operations.

According to Google, Axion provides “30% better performance than the fastest general-purpose Arm-based instances available in the cloud today” and “up to 50% better performance and up to 60% better energy-efficiency” than other general purpose Arm chips.

Intel: AI everywhere

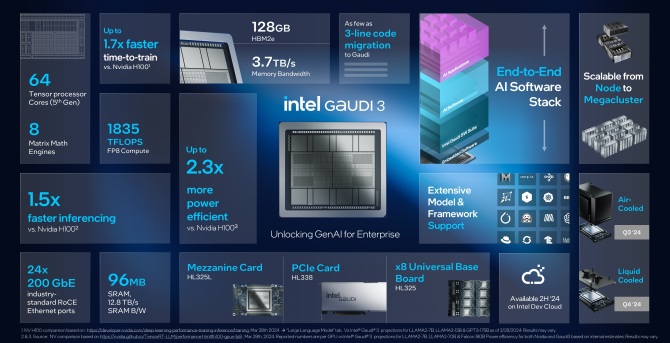

On Tuesday, Intel also announced a new version of its artificial intelligence processor, Gaudi 3. The company says the Gaudi 3 chip is over twice as power-efficient as Nvidia’s H100 GPU and can run AI models one-and-a-half times faster.

“Innovation is advancing at an unprecedented pace, all enabled by silicon – and every company is quickly becoming an AI company,” Intel CEO Pat Gelsinger said in a statement. “Intel is bringing AI everywhere across the enterprise, from the PC to the data center to the edge.”


Gelsinger did not state how much Gaudi 3 would cost, Bloomberg reports, but said his company’s so-called accelerator chips would be “a lot below” the cost of Nvidia’s current and future chips. They will offer an “extremely good” total cost of ownership, he added.

Intel claims that its latest chip will deliver “a significant leap” in AI training and inference for entities working on generative AI. Firms training AI systems need them to be able to make predictions in response to real questions – that is known as inference.

According to Intel, Gaudi 3 will be faster and more power-efficient than the H100 for the large language models typically trained on that GPU, such as Meta’s Llama or Abu Dhabi’s multilingual Falcon. It said the chip can also help train models such as Stable Diffusion or OpenAI’s Whisper, which is used for speech recognition.

The company says Gaudi 3 is 1.7 times faster at this type of training and 1.5 times better at running the software. The AI chip’s performance is comparable to that of Nvidia’s new B200, Intel said, excelling in some areas and falling behind in others.

Intel said the new Gaudi 3 chips would be available to customers in the third quarter, and companies including Supermicro, Dell and HP will build systems with the AI accelerator.

Challenging Nvidia

Companies that are building generative AI systems are looking to bring costs down by weaning themselves from relying on Nvidia’s GPUs, which are expensive.

In 2023, Amazon spent around $65 million on a single training run, according to software engineer James Hamilton. He expects that figure to reach over $1 billion soon.

A week ago, OpenAI and Microsoft revealed plans to build a $100 billion datacenter for AI training dubbed ‘Stargate.’ And in January, Meta CEO Mark Zuckerberg said his company plans to spend $9 billion on Nvidia GPUs alone.

All major tech firms buy chips from Nvidia, but they have also started to build their own or to buy from AMD, which introduced a new data center GPU called the MI300X last year. AMD plans to expand and sell more artificial intelligence chips for servers this year.

Google’s competitors Microsoft, Meta (both AMD customers) and Amazon have also developed their own AI chips. However, unseating Nvidia is not going to be easy. Earlier this year, Nvidia released its B100 and B200 GPUs, successors to the H100, promising performance gains. The chips are expected to start shipping later in 2024.

The AI boom and the H100 helped double Nvidia’s revenue and lift its market valuation to more than $2 trillion, making it one of the most successful companies on Wall Street.
