The same technology that underpins AI-generated art is being used to create new proteins for medicine, fueling hopes of new treatments for a range of diseases.

It is hoped that the creation of new proteins will help our bodies to fight illness in ways that were previously impossible.

Speeding up the process

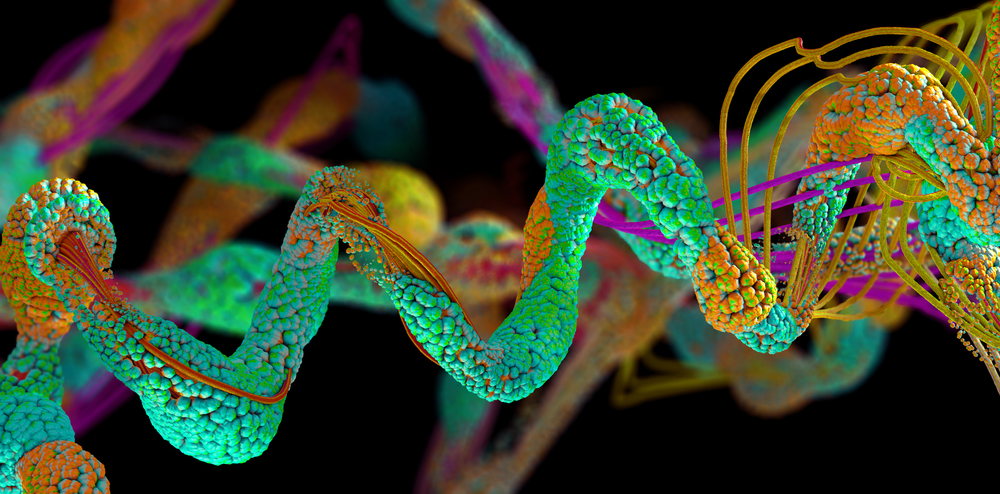

Proteins are an important part of how our bodies accomplish everyday tasks, from digesting food to transporting oxygen molecules through the cardiovascular system.

The 20,000 different proteins that handle these tasks are a known quantity, but new proteins could hold the potential for new abilities and better healthcare outcomes.

Dr. David Baker, the director of the Institute for Protein Design at the University of Washington, is one of the key figures working on new “artisanal” protein designs. In 2017 his team proved that it was possible to design and create new proteins that would take on the shape they wanted.

The work and research continue but are now turbocharged by artificial intelligence.

“What we need are new proteins that can solve modern-day problems, like cancer and viral pandemics,” Dr. Baker told the New York Times on Monday.

Dr. Baker added that with the aid of AI “we can design these proteins much faster, and with much higher success rates, and create much more sophisticated molecules that can help solve these problems.”

At this stage, the time needed to design a protein blueprint has been cut from years to weeks. Just last year Dr. Baker published a paper detailing how AI could accelerate protein design. That work and the technologies that underpin it have already been superseded by further advancements in AI.

The neural network technology behind AI art generators such as DALL-E and Midjourney goes beyond what scientists previously thought possible.

Neural networks advance medicine

Neural networks learn by analyzing huge amounts of data. For example, by analyzing thousands of pictures of bicycles the system can eventually learn to recognize a bicycle. DALL-E was trained by analyzing millions of images and the text captions that described them. In this way, the system learns to recognize the connections between the images and words.

When a prompter provides a description to DALL-E, the neural network generates a set of key features that the image may contain. A second neural network, known as a diffusion model, generates the pixels needed to create these features.

A similar technique is used to create new protein models. Proteins are made up of chains of compounds that fold and twist in three dimensions, and the resulting shape determines how a protein behaves. AI labs such as Google’s DeepMind have shown that neural networks can predict the shape of a protein from its compounds alone.

Nate Bennett, a researcher working at the University of Washington, told the NYT how these AI assistants can speed up the process in previously impossible ways.
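
To make "from its compounds alone" a little more concrete: the compounds in question are amino acids, and a protein is conventionally written as a string of one-letter codes drawn from the 20 standard amino acids. One common way to hand such a string to a neural network is one-hot encoding. The Python sketch below illustrates only that input step. It is an assumption about a typical representation, not a description of DeepMind's or Dr. Baker's pipeline, and the function name and example sequence are hypothetical.

```python
# The 20 standard amino acids, by conventional one-letter code.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def one_hot_encode(sequence: str) -> list[list[int]]:
    """Return one row per residue, with a single 1 marking its amino acid.

    Hypothetical helper for illustration; real models use richer inputs.
    """
    rows = []
    for residue in sequence.upper():
        if residue not in INDEX:
            raise ValueError(f"Unknown amino acid code: {residue!r}")
        row = [0] * len(AMINO_ACIDS)
        row[INDEX[residue]] = 1
        rows.append(row)
    return rows


# Made-up fragment used only as an example; it is not from the article.
encoded = one_hot_encode("MKTAYIAK")
print(len(encoded), "residues x", len(encoded[0]), "features")
```

A shape-prediction network would take a grid like this as its input and output three-dimensional coordinates; that far larger step is not shown here.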

“One of the most powerful things about this technology is that, like DALL-E, it does what you tell it to do,” said Bennett. “From a single prompt, it can generate an endless number of designs.”

Namrata Anand, an entrepreneur and former Stanford University researcher, agrees with Bennett about the benefits.

“With DALL-E, you can ask for an image of a panda eating a shoot of bamboo,” said Anand. “Equivalently, protein engineers can ask for a protein that binds to another in a particular way, or some other design constraint, and the generative model can build it.”

The future beckons

For now, AI-assisted protein design has focused on speeding up existing processes and shortening development times considerably.

The next stage is to open up fields of research that human minds could not pursue, or perhaps even conceive of, on their own.
