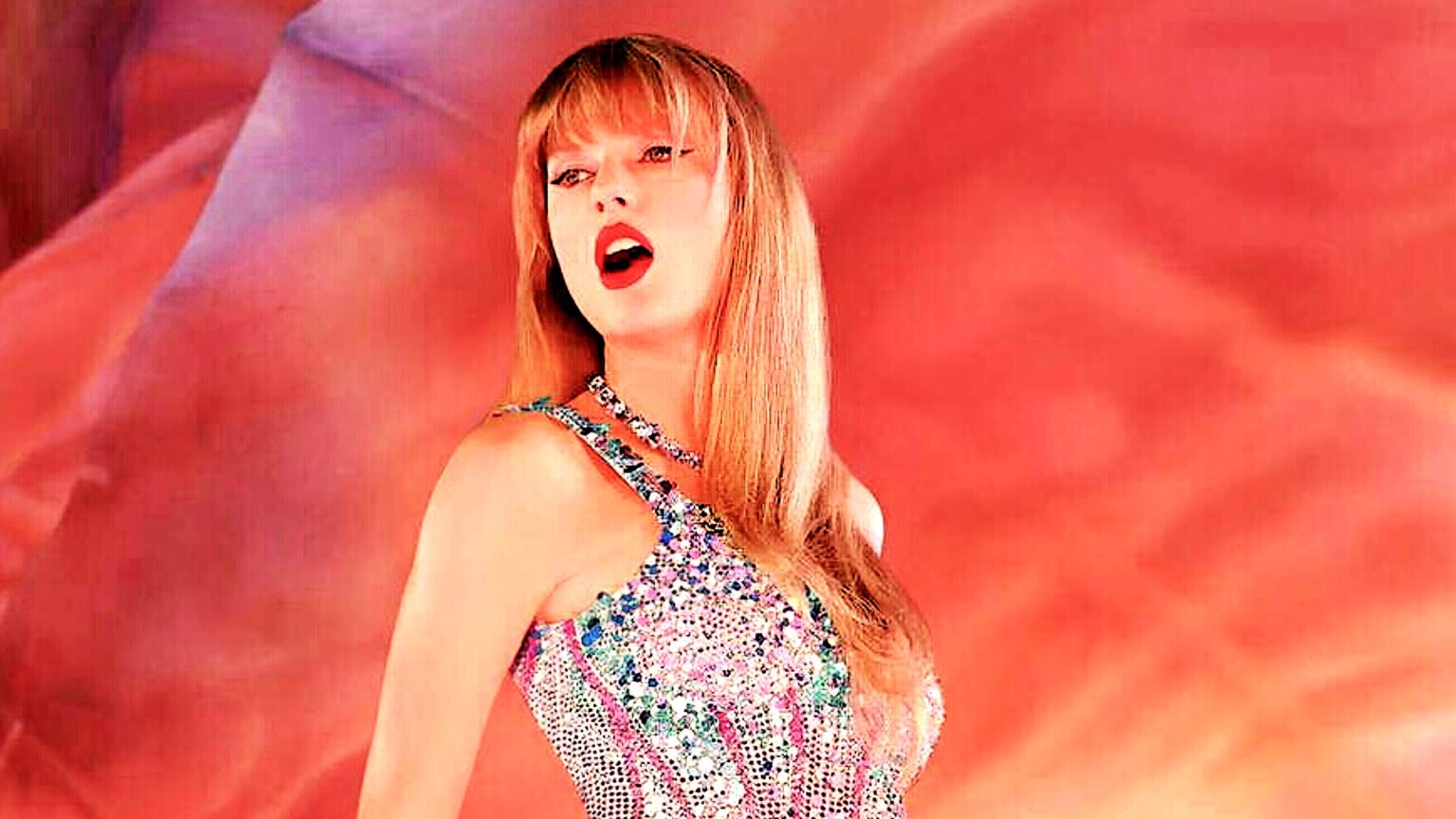

Images of high-profile female celebrities, including Taylor Swift, Natalie Portman, and Emma Watson, have been manipulated using AI technology to create erotic or pornographic content, as deepfake X-rated material surges, according to the Daily Mail.

A new report by New York-based software company ActiveFence found that the number of open-web platforms “discussing and sharing” celebrity deepfake porn in the seven months to August this year soared by 87% compared with the same period in 2022.

‘No one is safe’

While A-list stars in the music and movie industries have been the primary targets of this illicit sexual content, researchers warn that “no one is safe”. ActiveFence said the number of private individuals “undressed” using AI rose by nearly 400% over the past year.

“Today, we are seeing that it is affecting private individuals because it’s so immediate, so fast,” Amir Oneli, a researcher at ActiveFence, told the Mail. He was referring to how quickly and easily AI can generate fake sexual images or videos, which can put people’s lives at risk.

“The most tragic thing is that no one is safe,” he said.

The naked celebrity deepfakes are made with a type of generative AI tool that can digitally undress fully clothed people in still images. The face of a celebrity like Taylor Swift can also be superimposed onto the body of someone else engaged in sexual acts.

According to ActiveFence, the photos of the celebrities targeted in these attacks were taken from their social media or dating profiles. The report says fake AI-created porn videos “featuring singer Swift have been viewed hundreds of thousands of times.”

Accessing this material requires little skill: a simple Google search surfaces a range of readily available celebrity deepfakes. Oneli worries that many women, famous or not, could become victims of AI-created “revenge porn”.

The researcher partly blamed technology companies such as Microsoft, OpenAI and Google for making the problem worse. In February, Facebook owner Meta also open-sourced its AI technology, allowing novice developers to customize their own AI chatbots to create deepfake porn.

OpenAI has often said that its chatbots are trained to refuse prompts related to sex, racism, child abuse, and other harmful content. Google and Microsoft likewise emphasized that they have implemented comprehensive guardrails to prevent abuse.

The AI Boom

Meanwhile, the AI porn industry is booming, according to online safety firm ActiveFence. Sites are mushrooming across the internet, with users sharing AI tools that can supposedly “undress anybody”.

There are plenty of ‘how-to guides,’ including advice on choosing the right pictures to make anyone appear naked. Millions of people pay to access explicit AI-generated sexual content each month, the Mail reported.

MrDeepFakes, a popular fake porn website, boasts 17 million users per month, according to web analytics company SimilarWeb. ActiveFence found that some websites charge as little as $6 to download fake porn content, and prices can reach $560 for 2,000 images.

For $400, some websites let users skip the queue with a so-called ‘fast pass’ ticket, per the Mail report. When users buy longer versions of AI porn from MrDeepFakes, they are redirected to Fan-Topia, a site that claims to pay creators well for adult content.

“The fact that it’s [MrDeepFakes] even allowed to operate and is known is a complete indictment of every regulator in the space, of all law enforcement, of the entire system,” tech legal expert Noelle Martin told NBC.

The U.S. Congress is still debating the Preventing Deepfakes of Intimate Images Act, a bill introduced in May that aims to stop the sharing of illicit sexual deepfakes.