The creator of GPTZero claims his app can quickly and efficiently detect whether an essay is written by a human mind or artificial intelligence (AI), but overwhelming demand crashed the service soon after launch.

Edward Tian, the creator of GPTZero, believes his creation will assist in detecting what he calls “AI plagiarism,” helping the likes of high school teachers to grade papers and assignments with confidence.

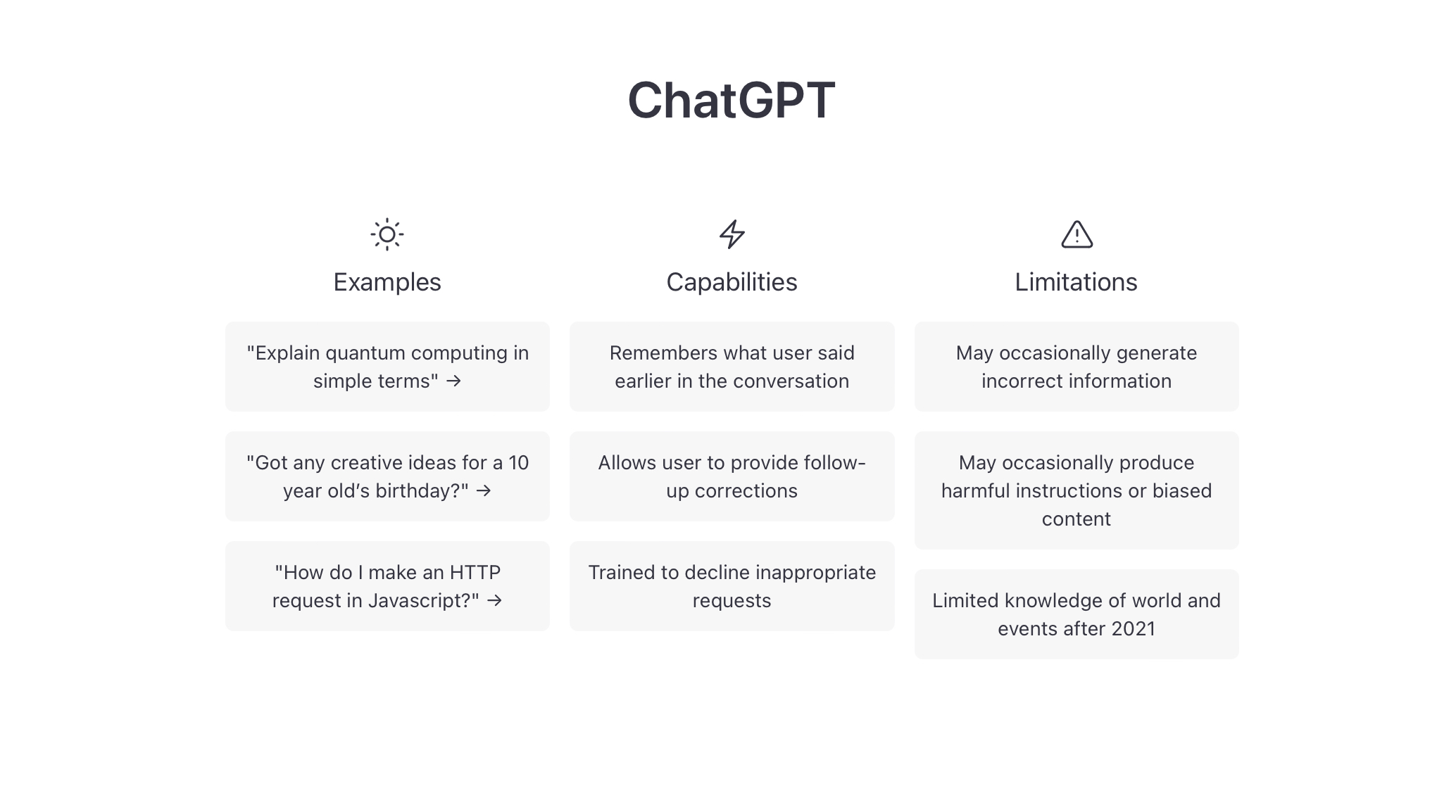

Chatbots make content creation easy

The launch of chatbots such as ChatGPT and You.com has created a headache for anyone trying to separate hard work from copied work.

According to Tian, who wrote GPTZero over the New Year period, the app should be of assistance to teachers in particular.

“The motivation here is increasing AI plagiarism,” said Tian on Twitter this week before asking, “are high school teachers going to want students using ChatGPT to write their history essays? Likely not.”

Tian wrote the program with assistance from Sreejan Kumar, a PhD candidate at Princeton University.

As MetaNews previously reported, a prompter can easily instruct a chatbot to write a specified number of words on any topic of their choosing. A prompter can also specify that the document be written in an essay or report style. It’s certainly not a stretch to imagine that a student could swiftly convert any school assignment into a chatbot prompt.

That makes the idea of a chatbot detector extremely compelling, but for now, GPTZero is a victim of its own success. The sheer demand for the app has crashed it more than once in a matter of days, despite Tian’s hosting service increasing its capacity and memory.

How GPTZero detects ‘AI plagiarism’

The complete inner workings of GPTZero and its bot detection model are said to be the subject of a future academic paper. In the meantime, Tian has revealed a few details about how GPTZero works in between the outages.

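Tian states that one measure used is called “perplexity,” which “is a metric of randomness, or the degree of uncertainty a model has in predicting (assigning probabilities) to a text. Lower perplexity = more AI preferred.” In other words, the less a text surprises a language model, the more likely it is to have been machine generated.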
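For readers who want something more concrete, perplexity is commonly computed as the exponential of the average negative log-probability a model assigns to each token. The sketch below follows that textbook definition; it is not GPTZero's code, and the function name `perplexity` and the sample probabilities are made up for illustration.

```python
import math


def perplexity(token_probs):
    """Textbook perplexity: exp of the mean negative log-probability.

    token_probs holds the probability a language model assigned to each
    token that actually appeared in the text (hypothetical values here).
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Illustrative numbers only: a text the model finds predictable
# versus one it finds surprising.
predictable_text = [0.9, 0.8, 0.95, 0.85]
surprising_text = [0.2, 0.05, 0.4, 0.1]

print(perplexity(predictable_text))  # lower score
print(perplexity(surprising_text))   # higher score
```

Under this definition, a model that gives every token a probability of 0.5 has a perplexity of 2, as if it were choosing between two equally likely options at each step.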

Tian points out that perplexity is just one measure among many, since some human-written texts also have low perplexity. Another measure is “burstiness”, or the uniformity of perplexity over time. AIs have more uniform perplexity whereas human writers have variable perplexity.
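Tian has not published how burstiness is calculated, so the following is only one plausible reading of his description: score each sentence separately, then measure how much those scores vary. The sketch assumes the spread is taken as a standard deviation; the function name `burstiness` and the per-sentence scores are hypothetical.

```python
import statistics


def burstiness(sentence_perplexities):
    """Spread of per-sentence perplexity scores (population std dev).

    This is an assumed stand-in for GPTZero's unpublished measure:
    a low value means uniform perplexity, a high value means it varies.
    """
    if len(sentence_perplexities) < 2:
        raise ValueError("need at least two sentence scores")
    return statistics.pstdev(sentence_perplexities)


# Made-up per-sentence perplexity scores for two essays.
uniform_essay = [12.0, 11.5, 12.3, 11.8]  # steady, as Tian says AI text tends to be
varied_essay = [8.0, 35.0, 14.0, 60.0]    # uneven, as he says human writing tends to be

print(burstiness(uniform_essay))
print(burstiness(varied_essay))
```

On these invented numbers the second essay scores far higher, which is the pattern Tian attributes to human writers.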

Hopefully, the final research paper can shed further light on the somewhat abstract concept of perplexity.

Can one bot detect another bot?

With GPTZero struggling to bear the load of demand, MetaNews investigated whether one chatbot could detect the work of another.

MetaNews first prompted You.com with several writing assignments, including on the subject of determining whether an essay was written by a human or a chatbot. These assignments were then submitted to ChatGPT following the prompt, “Is the following text written by a human or a chatbot?:”

In every instance, ChatGPT confidently declared, “The text you provided was written by a human. There are no obvious indicators that the text was generated by a chatbot or AI.”

The simple experiment indicates that bots do not make good detectors of bot-written content.

ChatGPT did go on to elaborate on the tell-tale signs of bot-written content. These included “unnatural phrasings or sentence structures” as well as “words or phrases that seem out of place or do not fit in context.”

At this stage in the development of AI-generated content, it seems that most bots have largely surpassed these early limitations, making their work increasingly difficult to detect.

Until Edward Tian and his collaborators reveal the secrets of perplexity, it seems that chatbots and high school teachers alike may remain equally perplexed by AI-created essays.
